Page 28 - ITU Journal, ICT Discoveries, Volume 3, No. 1, June 2020 Special issue: The future of video and immersive media


In addition, a 3D bounding box (may include an Supporting various representations (e.g., 360

object label) per object can be signaled in a virtual video, immersive video, point clouds) of the

supplemental enhanced information (SEI) message same objects in the streaming solution gives the

[7]. This allows efficient identification, localization, renderer better capabilities to reconstruct the scene

labeling, tracking, and object-based processing. elements adaptively with user motion. For instance,

far objects can be rendered from the virtual 360

4.3 Object-based immersive media platform

video since they do not react to the viewer’s motion.

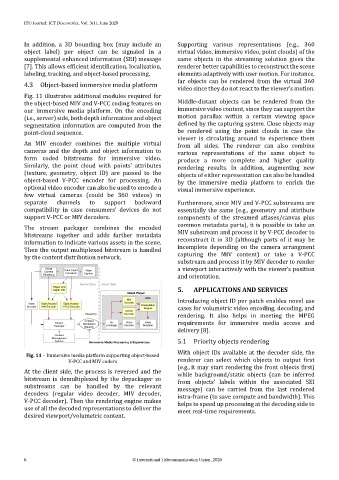

Fig. 11 illustrates additional modules required for

the object-based MIV and V-PCC coding features on Middle-distant objects can be rendered from the

our immersive media platform. On the encoding immersive video content, since they can support the

(i.e., server) side, both depth information and object motion parallax within a certain viewing space

segmentation information are computed from the defined by the capturing system. Close objects may

point-cloud sequence. be rendered using the point clouds in case the

viewer is circulating around to experience them

An MIV encoder combines the multiple virtual from all sides. The renderer can also combine

cameras and the depth and object information to various representations of the same object to

form coded bitstreams for immersive video. produce a more complete and higher quality

Similarly, the point cloud with points’ attributes rendering results. In addition, augmenting new

(texture, geometry, object ID) are passed to the objects of either representation can also be handled

object-based V-PCC encoder for processing. An by the immersive media platform to enrich the

optional video encoder can also be used to encode a visual immersive experience.

few virtual cameras (could be 360 videos) in

separate channels to support backward Furthermore, since MIV and V-PCC substreams are

compatibility in case consumers’ devices do not essentially the same (e.g., geometry and attribute

support V-PCC or MIV decoders. components of the streamed atlases/canvas plus

The stream packager combines the encoded common metadata parts), it is possible to take an

bitstreams together and adds further metadata MIV substream and process it by V-PCC decoder to

information to indicate various assets in the scene. reconstruct it in 3D (although parts of it may be

Then the output multiplexed bitstream is handled incomplete depending on the camera arrangment

by the content distribution network. capturing the MIV content) or take a V-PCC

substream and process it by MIV decoder to render

a viewport interactively with the viewer’s position

and orientation.

5. APPLICATIONS AND SERVICES

Introducing object ID per patch enables novel use

cases for volumetric video encoding, decoding, and

rendering. It also helps in meeting the MPEG

requirements for immersive media access and

delivery [8].

5.1 Priority objects rendering

Fig. 11 – Immersive media platform supporting object-based With object IDs available at the decoder side, the

V-PCC and MIV coders renderer can select which objects to output first

(e.g., it may start rendering the front objects first)

At the client side, the process is reversed and the while background/static objects (can be inferred

bitstream is demultiplexed by the depackager so from objects’ labels within the associated SEI

substreams can be handled by the relevant message) can be carried from the last rendered

decoders (regular video decoder, MIV decoder, intra-frame (to save compute and bandwidth). This

V-PCC decoder). Then the rendering engine makes helps to speed up processing at the decoding side to

use of all the decoded representations to deliver the meet real-time requirements.

desired viewport/volumetric content.
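The priority rendering described above can be sketched together with the distance-based choice of representation from Section 4.3. This is a minimal Python illustration under stated assumptions, not the platform's implementation. The `SceneObject` type, the `STATIC_LABELS` set used to infer static objects from labels, the distance thresholds `NEAR_MAX_M`/`MID_MAX_M`, and the functions `choose_representation` and `plan_frame` are all hypothetical. The sketch renders dynamic objects front to back. Static objects whose rendering is cached from the last intra-frame are reused rather than re-rendered.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple

# Hypothetical labels treated as background/static. The paper infers this
# from object labels in the SEI message; this particular set is an assumption.
STATIC_LABELS = {"background", "building", "floor", "sky"}

# Hypothetical distance thresholds (meters) for choosing a representation.
NEAR_MAX_M = 2.0
MID_MAX_M = 10.0


@dataclass
class SceneObject:
    object_id: int     # per-object ID, as carried with the patches
    label: str         # object label, e.g. from the SEI message
    distance_m: float  # current distance from the viewer


def choose_representation(obj: SceneObject) -> str:
    """Pick a representation by distance (near / middle / far)."""
    if obj.distance_m <= NEAR_MAX_M:
        return "point_cloud"      # viewer may move around the object
    if obj.distance_m <= MID_MAX_M:
        return "immersive_video"  # parallax within the viewing space
    return "360_video"            # far objects show no parallax


def plan_frame(objects: List[SceneObject],
               cached_static_ids: Set[int]) -> List[Tuple[int, str]]:
    """Return (object_id, action) pairs in rendering order.

    Dynamic objects come first, sorted front to back. Static objects are
    reused from the last intra-frame when cached, otherwise rendered.
    """
    static: List[SceneObject] = []
    dynamic: List[SceneObject] = []
    for obj in objects:
        (static if obj.label in STATIC_LABELS else dynamic).append(obj)

    plan: List[Tuple[int, str]] = []
    # Front objects first: increasing distance from the viewer.
    for obj in sorted(dynamic, key=lambda o: o.distance_m):
        plan.append((obj.object_id, "render:" + choose_representation(obj)))

    for obj in static:
        if obj.object_id in cached_static_ids:
            plan.append((obj.object_id, "reuse:intra"))  # saves compute
        else:
            plan.append((obj.object_id, "render:" + choose_representation(obj)))
    return plan


if __name__ == "__main__":
    scene = [
        SceneObject(1, "person", 5.0),
        SceneObject(2, "background", 50.0),
        SceneObject(3, "ball", 1.0),
    ]
    print(plan_frame(scene, cached_static_ids={2}))
    # prints [(3, 'render:point_cloud'), (1, 'render:immersive_video'), (2, 'reuse:intra')]
```

Keeping the ordering and the caching decision in one function makes it easy to apply a per-frame time budget. For example, the renderer could truncate the returned plan, and the closest dynamic objects would still be drawn first.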

6 © International Telecommunication Union, 2020