Page 368 - Kaleidoscope Academic Conference Proceedings 2024


2024 ITU Kaleidoscope Academic Conference

Figure 7 – Activity Diagram for Image and Text Processing

platform. This allows users to perform various actions, such as opening materials, navigating through lessons, or closing applications, using simple voice commands. This deep integration empowers users to control their learning environment independently, enhancing both accessibility and ease of use.
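The actions listed above (opening materials, navigating through lessons, closing applications) imply a step that turns a spoken command into a concrete action. The Python sketch below is illustrative only. It assumes speech has already been transcribed to text, and it uses simple keyword matching as a stand-in for whatever intent recognition UnSight actually performs. The `Action` enum, the `KEYWORDS` table, `parse_command`, `LessonNavigator`, and `handle_utterance` are hypothetical names and are not part of the described system.

```python
import re
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Optional


class Action(Enum):
    """Hypothetical actions, mirroring the examples given in the text."""
    OPEN_MATERIAL = auto()
    CLOSE_MATERIAL = auto()
    NEXT_LESSON = auto()
    PREVIOUS_LESSON = auto()
    NEXT_PAGE = auto()


# Hypothetical keyword rules: an action fires when all of its keywords occur
# in the transcript. Multi-word rules come first so that, e.g., "open the next
# lesson" maps to NEXT_LESSON rather than OPEN_MATERIAL.
KEYWORDS: list[tuple[Action, tuple[str, ...]]] = [
    (Action.NEXT_PAGE, ("next", "page")),
    (Action.NEXT_PAGE, ("turn", "page")),
    (Action.NEXT_LESSON, ("next", "lesson")),
    (Action.PREVIOUS_LESSON, ("previous", "lesson")),
    (Action.OPEN_MATERIAL, ("open",)),
    (Action.CLOSE_MATERIAL, ("close",)),
]


def parse_command(transcript: str) -> Optional[Action]:
    """Determine the intended action from transcribed speech (None if unknown)."""
    words = set(re.findall(r"[a-z']+", transcript.lower()))
    for action, required in KEYWORDS:
        if all(word in words for word in required):
            return action
    return None


@dataclass
class LessonNavigator:
    """Hypothetical learner state that the recognised actions operate on."""
    lesson: int = 1
    page: int = 1
    material_open: bool = False
    history: list[str] = field(default_factory=list)

    def execute(self, action: Action) -> None:
        if action is Action.OPEN_MATERIAL:
            self.material_open = True
        elif action is Action.CLOSE_MATERIAL:
            self.material_open = False
        elif action is Action.NEXT_LESSON:
            self.lesson += 1
            self.page = 1
        elif action is Action.PREVIOUS_LESSON:
            self.lesson = max(1, self.lesson - 1)
            self.page = 1
        elif action is Action.NEXT_PAGE:
            self.page += 1
        self.history.append(action.name)


def handle_utterance(nav: LessonNavigator, transcript: str) -> str:
    """Capture -> determine action -> execute; returns text to be spoken back."""
    action = parse_command(transcript)
    if action is None:
        return "Sorry, I did not understand that command."
    nav.execute(action)
    return f"Done: {action.name.replace('_', ' ').lower()}."


if __name__ == "__main__":
    nav = LessonNavigator()
    print(handle_utterance(nav, "Open the material"))      # Done: open material.
    print(handle_utterance(nav, "Turn the page."))         # Done: next page.
    print(handle_utterance(nav, "Go to the next lesson"))  # Done: next lesson.
    print(nav.lesson, nav.page, nav.material_open)         # 2 1 True
```

Checking multi-word rules before single-keyword rules is a deliberate choice in this sketch. It lets a phrase that mixes verbs, such as "open the next lesson", resolve to lesson navigation. A deployed system could swap the keyword table for a trained intent classifier while keeping the same capture, determine, and execute structure.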

Architecture and Workflow: The voice-to-action feature

captures voice commands and processes them to determine

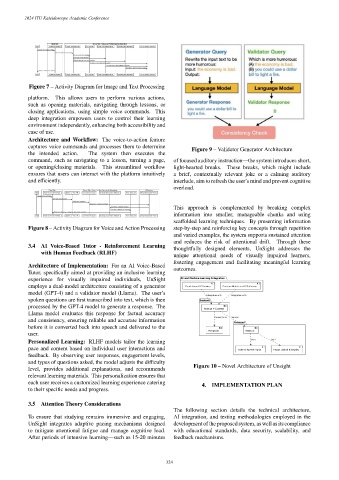

Figure 9 – Validator Generator Architecture

the intended action. The system then executes the

command, such as navigating to a lesson, turning a page, of focused auditory instruction—the system introduces short,

or opening/closing materials. This streamlined workflow light-hearted breaks. These breaks, which might include

ensures that users can interact with the platform intuitively a brief, contextually relevant joke or a calming auditory

and efficiently. interlude, aim to refresh the user’s mind and prevent cognitive

overload.

This approach is complemented by breaking complex

information into smaller, manageable chunks and using

scaffolded learning techniques. By presenting information

Figure 8 – Activity Diagram for Voice and Action Processing step-by-step and reinforcing key concepts through repetition

and varied examples, the system supports sustained attention

and reduces the risk of attentional drift. Through these

3.4 AI Voice-Based Tutor - Reinforcement Learning

thoughtfully designed elements, UnSight addresses the

with Human Feedback (RLHF)

unique attentional needs of visually impaired learners,

fostering engagement and facilitating meaningful learning

Architecture of Implementation: For an AI Voice-Based

outcomes.

Tutor, specifically aimed at providing an inclusive learning

experience for visually impaired individuals, UnSight

employs a dual-model architecture consisting of a generator

model (GPT-4) and a validator model (Llama). The user’s

spoken questions are first transcribed into text, which is then

processed by the GPT-4 model to generate a response. The

Llama model evaluates this response for factual accuracy

and consistency, ensuring reliable and accurate information

before it is converted back into speech and delivered to the

user.

Personalized Learning: RLHF models tailor the learning

pace and content based on individual user interactions and

feedback. By observing user responses, engagement levels,

and types of questions asked, the model adjusts the difficulty

Figure 10 – Novel Architecture of Unsight

level, provides additional explanations, and recommends

relevant learning materials. This personalization ensures that

each user receives a customized learning experience catering

4. IMPLEMENTATION PLAN

to their specific needs and progress.

3.5 Attention Theory Considerations

The following section details the technical architecture,

To ensure that studying remains immersive and engaging, AI integration, and testing methodologies employed in the

UnSight integrates adaptive pacing mechanisms designed development of the proposed system, as well as its compliance

to mitigate attentional fatigue and manage cognitive load. with educational standards, data security, scalability, and

After periods of intensive learning—such as 15-20 minutes feedback mechanisms.
