Page 366 - Kaleidoscope Academic Conference Proceedings 2024


2024 ITU Kaleidoscope Academic Conference

or low-vision individuals with sighted volunteers through 3.1 Speech and Text Processing

a smartphone app to provide visual assistance for various

tasks. This crowdsourced model has proven both successful Speech Recognition: The system begins by capturing

and scalable, demonstrating the power of community-driven the user’s voice input using the Web Speech API, which

solutions. transcribes spoken language into text. This enables users

to communicate with the application naturally, facilitating an

intuitive and seamless interaction.

Aira[5] is another innovative startup, offering a Emotion Recognition for Optimized Responses: The

subscription service that connects blind users with trained proposed system employs AI models capable of recognizing

agents via smart glasses or a smartphone app. These agents speech tonality and emotional cues using Mel-Frequency

provide real-time assistance for navigation, reading, and other Cepstral Coefficients. By analyzing these cues, the platform

tasks. adapts its responses to better suit the user’s emotional

state, making interactions more empathetic and contextually

relevant. This ensures that users receive supportive feedback

Research also plays a critical role. Studies like those from

tailored to their current mood, enhancing their overall

McKinsey Co., in collaboration with Tilting the Lens [6],

learning experience. [12]

highlight the digital divide and emphasize the importance

Internet Search Integration: For information retrieval, the

of digital accessibility for blind and low-vision consumers.

system employs the Google Custom Search JSON API. It

Their research involves accessible digital surveys, online

processes the transcribed text as a search query and fetches

focus groups, and interviews, underscoring the need for

relevant information from the web, ensuring that responses

inclusive design in digital products

are informative and contextually relevant.[13]

Response Formulation: The system generates a response

One notable study, "Explaining CLIP’s Performance that incorporates both the informational content obtained

Disparities on Data from Blind/Low Vision Users," [7] from the web and the emotional context detected from

evaluates large multi-modal models (LMMs), particularly the user’s speech. This approach provides a personalized

CLIP, and highlights the necessity of datasets that accurately interaction, ensuring that the user receives accurate and

represent blind and low-vision users to improve model contextually appropriate information.[14]

performance. Another study, "ImageAssist: Tools for Text-to-Speech Output: The final response is converted

Enhancing Touchscreen-Based Image Exploration Systems back into speech using advanced text-to-speech technology,

for Blind and Low Vision Users," [8] investigates allowing the system to communicate with the user audibly.

touchscreen-based systems that enrich alt text to offer a This feature is crucial for accessibility, particularly for

deeper understanding of digital images through touch and visually impaired users, enabling them to receive information

audio feedback. in an auditory format.[15]

User Interface (UI): The UI displays the transcription

and processed responses with visual cues indicating which

The paper "Artificial Intelligence for Visually Impaired"

parts of the response have been spoken. This not only

[9] reviews 178 studies on AI applications designed to

aids the helper in following the response but also enhances

aid visually impaired individuals, including deep learning

comprehension. The interface is designed to be intuitive

methods for diagnosing eye diseases and enhancing

and user-friendly, ensuring that visually impaired users can

accessibility technologies . "Towards Assisting Visually

navigate and interact with the platform effectively.[16]

Impaired Individuals: A Review on Current Solutions" [10]

provides a comprehensive overview of assistive technologies

such as AI-driven navigation, object recognition, and

reading assistance . Lastly, "Recent Trends in Computer

Vision-Driven Scene Understanding for Visually Impaired"

systematically maps the current state-of-the-art in computer

vision-based assistive technologies, focusing on scene

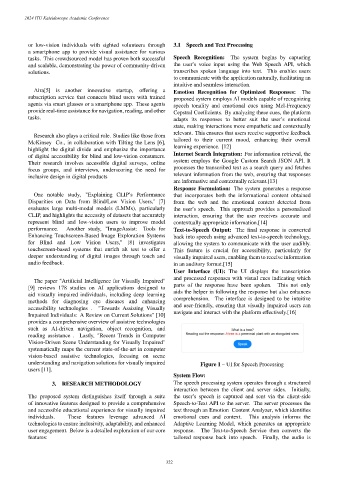

understanding and navigation solutions for visually impaired Figure 1 – UI for Speech Processing

users [11].

System Flow:

3. RESEARCH METHODOLOGY The speech processing system operates through a structured

interaction between the client and server sides. Initially,

The proposed system distinguishes itself through a suite the user’s speech is captured and sent via the client-side

of innovative features designed to provide a comprehensive Speech-to-Text API to the server. The server processes the

and accessible educational experience for visually impaired text through an Emotion Content Analyzer, which identifies

individuals. These features leverage advanced AI emotional cues and context. This analysis informs the

technologies to ensure inclusivity, adaptability, and enhanced Adaptive Learning Model, which generates an appropriate

user engagement. Below is a detailed exploration of our core response. The Text-to-Speech Service then converts the

features: tailored response back into speech. Finally, the audio is
