Page 49 - ITU Journal Future and evolving technologies Volume 2 (2021), Issue 4 – AI and machine learning solutions in 5G and future networks



Fig. 5 – Proposed architecture for audio to video synthesis

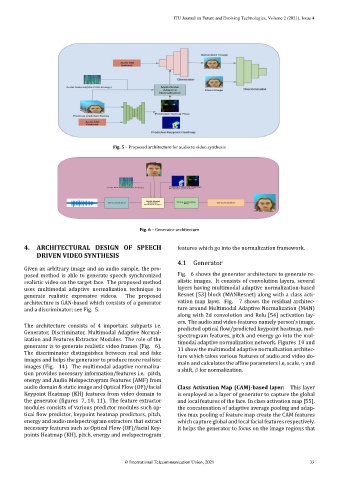

Fig. 6 – Generator architecture

4. ARCHITECTURAL DESIGN OF SPEECH DRIVEN VIDEO SYNTHESIS

Given an arbitrary image and an audio sample, the proposed method generates realistic, speech-synchronized video on the target face. It uses a multimodal adaptive normalization technique to generate realistic, expressive videos. The proposed architecture is GAN-based and consists of a generator and a discriminator; see Fig. 5.

The architecture consists of four main subparts: the Generator, the Discriminator, Multimodal Adaptive Normalization and the Feature Extractor Modules. The role of the generator is to generate realistic video frames (Fig. 6). The discriminator distinguishes between real and fake images and helps the generator to produce more realistic images (Fig. 14). The multimodal adaptive normalization provides the generator with the necessary features: pitch, energy and Audio Melspectrogram Features (AMF) from the audio domain, and the static image and Optical Flow (OF)/facial Keypoint Heatmap (KH) features from the video domain (Figures 7, 10, 11). The feature extractor modules consist of various predictor modules, such as the optical flow predictor, the keypoint heatmap predictor, and the pitch, energy and audio melspectrogram extractors, which extract the necessary features (OF/KH, pitch, energy and melspectrogram features) that go into the normalization framework.

4.1 Generator

Fig. 6 shows the generator architecture used to generate realistic images. It consists of convolution layers, several layers having a multimodal adaptive normalization-based ResNet [53] block (MANResnet), and a class activation map layer. Fig. 7 shows the residual architecture around Multimodal Adaptive Normalization (MAN) along with 2D convolution and ReLU [54] activation layers. The audio and video features, namely the person's image, the predicted optical flow/predicted keypoint heatmap, melspectrogram features, pitch and energy, go into the multimodal adaptive normalization network. Figures 10 and 11 show the multimodal adaptive normalization architecture, which takes various features from the audio and video domains and calculates the affine parameters, i.e., a scale and a shift, for normalization.

Class Activation Map (CAM)-based layer: This layer is employed as a layer of the generator to capture the global and local features of the face. In the class activation map [55], the concatenation of adaptive average pooling and adaptive max pooling of the feature map creates the CAM features, which capture global and local facial features respectively. It helps the generator to focus on the image regions that

© International Telecommunication Union, 2021
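
Two operations named in Section 4.1 can be illustrated with a minimal sketch: the affine modulation (scale and shift) applied after normalization, and the concatenation of average-pooled and max-pooled features in the CAM-based layer. This is not the authors' implementation. The function names `modulated_norm` and `cam_features` are hypothetical, and the sketch assumes plain Python lists instead of tensors, per-channel statistics, scalar `scale` and `shift` values supplied by the caller (in the paper they are computed from the audio and video features), and the form `scale * x_hat + shift`, which the text does not spell out.

```python
import math


def modulated_norm(channel, scale, shift, eps=1e-5):
    """Normalize one feature channel, then apply affine parameters.

    Assumption: `scale` and `shift` are scalars given by the caller; they
    stand in for the values the normalization network would predict.
    """
    mean = sum(channel) / len(channel)
    var = sum((x - mean) ** 2 for x in channel) / len(channel)
    std = math.sqrt(var + eps)  # eps avoids division by zero
    return [scale * (x - mean) / std + shift for x in channel]


def cam_features(feature_map):
    """Concatenate global average pooling and global max pooling.

    `feature_map` is a list of channels, each a flat list of activations.
    Pooling to a single value per channel is an assumption of this sketch.
    """
    avg_pooled = [sum(ch) / len(ch) for ch in feature_map]
    max_pooled = [max(ch) for ch in feature_map]
    return avg_pooled + max_pooled


if __name__ == "__main__":
    # Two channels with two activations each.
    print(cam_features([[1.0, 3.0], [2.0, 6.0]]))  # [2.0, 4.0, 3.0, 6.0]
    print(modulated_norm([1.0, 2.0, 3.0, 4.0], scale=2.0, shift=1.0))
```

After modulation the channel mean equals `shift` and its spread is governed by `scale`, which is how features from another modality could steer the generator's activations under these assumptions.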