Page 220 - Kaleidoscope Academic Conference Proceedings 2020

P. 220

2020 ITU Kaleidoscope Academic Conference

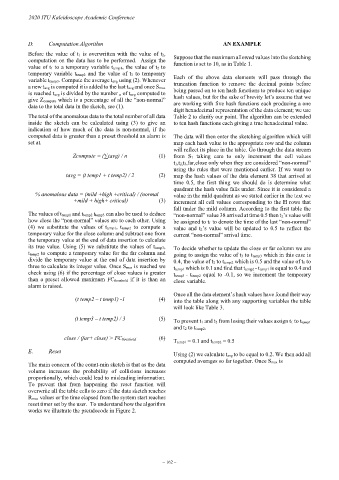

D. Computation Algorithm AN EXAMPLE

Before the value of t 1 is overwritten with the value of t 2,

computation on the data has to be performed. Assign the Suppose that the maximum allowed values into the sketching

function is set to 10, as in Table 1.

value of t1 to a temporary variable ttemp1, the value of t2 to

temporary variable ttemp2 and the value of t3 to temporary

variable ttemp3. Compute the average tavg using (2). Whenever Each of the above data elements will pass through the

a new tavg is computed it is added to the last tavg and once Smax truncation function to remove the decimal points before

is reached tavg is divided by the number n of tavg computed to being passed on to ten hash functions to produce ten unique

give Zcompute which is a percentage of all the “non-normal” hash values, but for the sake of brevity let’s assume that we

data to the total data in the sketch, see (1). are working with five hash functions each producing a one

digit hexadecimal representation of the data element; we use

The total of the anomalous data to the total number of all data Table 2 to clarify our point. The algorithm can be extended

inside the sketch can be calculated using (3) to give an to ten hash functions each giving a true hexadecimal value.

indication of how much of the data is non-normal, if the

computed data is greater than a preset threshold an alarm is The data will then enter the sketching algorithm which will

set at. map each hash value to the appropriate row and the column

will reflect its place in the table. Go through the data stream

Zcompute = (∑tavg) / n (1) from S 1 taking care to only increment the cell values

t1,t2,t3,far,close only when they are considered “non-normal”

using the rules that were mentioned earlier. If we want to

tavg = (t temp1 + t temp2) / 2 (2) map the hash values of the data element 38 that arrived at

time 0.5, the first thing we should do is determine what

quadrant the hash value falls under. Since it is considered a

% anomalous data = (mild +high +critical) / (normal value in the mild quadrant as we stated earlier in the text we

+mild + high+ critical) (3) increment all cell values corresponding to the H rows that

fall under the mild column. According to the first table the

The values of ttemp1 and ttemp2 t temp3 can also be used to deduce “non-normal” value 38 arrived at time 0.5 then t2’s value will

how close the “non-normal” values are to each other. Using be assigned to t 1 to denote the time of the last “non-normal”

(4) we substitute the values of ttemp1, ttemp2 to compute a value and t2’s value will be updated to 0.5 to reflect the

temporary value for the close column and subtract one from current “non-normal” arrival time.

the temporary value at the end of data insertion to calculate

its true value. Using (5) we substitute the values of t temp3, To decide whether to update the close or far column we are

ttemp2 to compute a temporary value for the far column and going to assign the value of t3 to ttemp3 which in this case is

divide the temporary value at the end of data insertion by 0.4, the value of t 2 to t temp2 which is 0.5 and the value of t 1 to

three to calculate its integer value. Once Smax is reached we ttemp1 which is 0.1 and find that ttemp2 - ttemp1 is equal to 0.4 and

check using (6) if the percentage of close values is greater ttemp3 - t temp2 equal to -0.1, so we increment the temporary

than a preset allowed maximum FC threshold if it is than an close variable.

alarm is raised.

Once all the data element’s hash values have found their way

(t temp2 – t temp1) -1 (4) into the table along with any supporting variables the table

will look like Table 3.

(t temp3 – t temp2) / 3 (5) To prevent t1 and t 2 from losing their values assign t1 to ttemp1

and t2 to ttemp2.

close / (far+ close) > FC threshold (6) Ttemp1 = 0.1 and ttemp2 = 0.5

E. Reset

Using (2) we calculate tavg to be equal to 0.2. We then add all

computed averages so far together. Once Smax is

The main concern of the count-min sketch is that as the data

volume increases the probability of collisions increases

proportionally, which could lead to misleading information.

To prevent that from happening the reset function will

overwrite all the table cells to zero if the data sketch reaches

Rmax values or the time elapsed from the system start reaches

reset timer set by the user. To understand how the algorithm

works we illustrate the pseudocode in Figure 2.

– 162 –