Page 127 - ITU KALEIDOSCOPE, ATLANTA 2019

P. 127

ICT for Health: Networks, standards and innovation

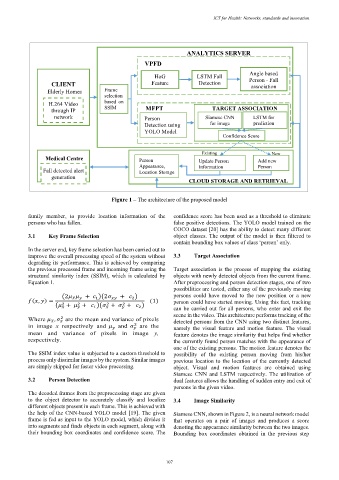

ANALYTICS SERVER

VPFD

HoG LSTM Fall Angle based

CLIENT Feature Detection Person - Fall

association

Elderly Homes Frame Extraction

selection

H.264 Video based on

SSIM

through IP MFPT TARGET ASSOCIATION

network Person Siamese CNN LSTM for

Detection using for image prediction

YOLO Model similarity

Confidence Score

Existing New

Medical Centre Person Update Person Add new

Appearance, Information Person

Fall detected alert Location Storage

generation

CLOUD STORAGE AND RETRIEVAL

Figure 1 – The architecture of the proposed model

family member, to provide location information of the confidence score has been used as a threshold to eliminate

persons who has fallen. false positive detections. The YOLO model trained on the

COCO dataset [20] has the ability to detect many different

3.1 Key Frame Selection object classes. The output of the model is then filtered to

contain bounding box values of class ‘person’ only.

In the server end, key frame selection has been carried out to

improve the overall processing speed of the system without 3.3 Target Association

degrading its performance. This is achieved by comparing

the previous processed frame and incoming frame using the Target association is the process of mapping the existing

structural similarity index (SSIM), which is calculated by objects with newly detected objects from the current frame.

Equation 1. After preprocessing and person detection stages, one of two

possibilities are tested, either any of the previously moving

(2 + )(2 + ) persons could have moved to the new position or a new

1

2

( , ) = (1) person could have started moving. Using this fact, tracking

2

2

2

2

( + + )( + + ) can be carried out for all persons, who enter and exit the

2

1

scene in the video. This architecture performs tracking of the

Where , are the mean and variance of pixels detected persons from the CNN using two distinct features,

2

in image x respectively and and are the namely the visual feature and motion feature. The visual

2

mean and variance of pixels in image y, feature denotes the image similarity that helps find whether

respectively. the currently found person matches with the appearance of

one of the existing persons. The motion feature denotes the

The SSIM index value is subjected to a custom threshold to possibility of the existing person moving from his/her

process only dissimilar images by the system. Similar images previous location to the location of the currently detected

are simply skipped for faster video processing. object. Visual and motion features are obtained using

Siamese CNN and LSTM respectively. The utilization of

3.2 Person Detection dual features allows the handling of sudden entry and exit of

persons in the given video.

The decoded frames from the preprocessing stage are given

to the object detector to accurately classify and localize 3.4 Image Similarity

different objects present in each frame. This is achieved with

the help of the CNN-based YOLO model [19]. The given Siamese CNN, shown in Figure 2, is a neural network model

frame is fed as input to the YOLO model, which divides it that operates on a pair of images and produces a score

into segments and finds objects in each segment, along with denoting the appearance similarity between the two images.

their bounding box coordinates and confidence score. The Bounding box coordinates obtained in the previous step

– 107 –