Page 105 - Kaleidoscope Academic Conference Proceedings 2024

P. 105

Innovation and Digital Transformation for a Sustainable World

perform the matrix multiplication algorithm. This involves GPU’s streaming multiprocessors(SMs). Once the matrices

a combination of arithmetic operations(e.g., Addition and are loaded into GPU memory and the matrix multiplication

Multiplication) and data movement instructions. Once the kernel is working, the kernel is launched from the CPU.

matrix multiplication is complete, the result matrix is stored This initiates parallel execution of the matrix multiplication

back into CPU registers. Depending on the application’s operation on the GPU. The number of threads per block

requirements, the results may need to be written back to and the number of blocks per grid are determined based

RAM. This involves a similar process to loading data from on the size of the matrix and the GPU’s architecture to

RAM, where the CPU’s cache hierarchy is utilized to manage achieve optimal parallelism. Each thread block is scheduled

data movement efficiently. For these operations, we use the onto an SM for execution. Within each SM, multiple

PYTHON library(numpy). thread blocks can be processed concurrently, with each

block utilizing the SM’s resources efficiently, The CUDA

runtime manages the scheduling and execution of thread

3. MATRIX MULTIPLICATION ON GPU

blocks across the GPU’s SM, maximizing parallelism and

throughput. Once the matrix multiplication is complete,

the result matrix is transferred from GPU memory back

to CPU memory using functions similar to cudaMemcpy().

Overall, performing matrix multiplication on an RTX A4000

GPU involves leveraging its massively parallel architecture,

utilizing the CUDA programming model for kernel execution,

and efficiently managing data movement between the CPU

and GPU memories. This approach allows for significant

acceleration of matrix operations compared to traditional

CPU-based computations, especially for large matrices

4. MATRIX MULTIPLICATION ON ALVEO

ACCELERATOR CARD

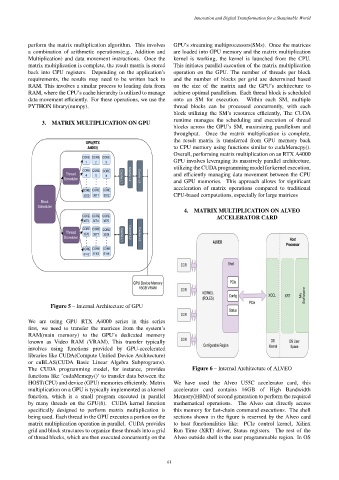

Figure 5 – Internal Architecture of GPU

We are using GPU RTX A4000 series in this series

first, we need to transfer the matrices from the system’s

RAM(main memory) to the GPU’s dedicated memory

known as Video RAM (VRAM). This transfer typically

involves using functions provided by GPU-accelerated

libraries like CUDA(Compute Unified Device Architecture)

or cuBLAS(CUDA Basic Linear Algebra Subprograms).

The CUDA programming model, for instance, provides Figure 6 – Internal Architecture of ALVEO

functions like ’cudaMemcpy()’ to transfer data between the

HOST(CPU) and device (GPU) memories efficiently. Matrix We have used the Alveo U55C accelerator card, this

multiplication on a GPU is typically implemented as a kernel accelerator card contains 16GB of High Bandwidth

function, which is a small program executed in parallel Memory(HBM) of second generation to perform the required

by many threads on the GPU(6). CUDA kernel function mathematical operations. The Alveo can directly access

specifically designed to perform matrix multiplication is this memory for fast-chain command executions. The shell

being used. Each thread in the GPU executes a portion on the sections shown in the figure is reserved by the Alveo card

matrix multiplication operation in parallel. CUDA provides to host functionalities like: PCIe control kernel, Xilinx

grid and block structures to organize these threads into a grid Run Time (XRT) driver, Status registers. The rest of the

of thread blocks, which are then executed concurrently on the Alveo outside shell is the user programmable region. In OS

– 61 –