Page 121 - ITU Journal Future and evolving technologies Volume 2 (2021), Issue 4 – AI and machine learning solutions in 5G and future networks

P. 121

ITU Journal on Future and Evolving Technologies, Volume 2 (2021), Issue 4

Table 2 – Five categories of labels for prediction

Scenario TypeNo. Type Name Description

Network Element(NE) Failure 1 Node Down Unplanned reboot of a NE

Interface Failure 3 Interface Down Cause an interface down

Interface Failure 57 Packet Loss and Delay Cause the packet loss and delay on an interface

Route Information Failure 9 BGP Injection Inject the anomaly route from another SP

Route Information Failure 11 BGP Hijack Hijack the own origin route by another SP

Fig. 5 – Visualization of a decision tree

A decision tree is a very intuitive data structure. Visual‑

izing a decision tree can give valuable insights into the

model’s learning and how domain‑relevant the learning

is. In addition, the Decision Tree is fast and performs well

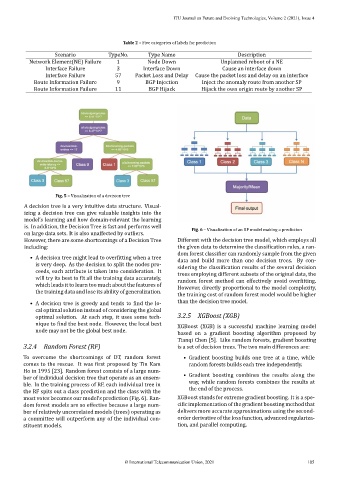

Fig. 6 – Visualization of an RF model making a prediction

on large data sets. It is also unaffected by outliers.

However, there are some shortcomings of a Decision Tree Different with the decision tree model, which employs all

including: the given data to determine the classi ication rules, a ran‑

dom forest classi ier can randomly sample from the given

• A decision tree might lead to over itting when a tree data and build more than one decision trees. By con‑

is very deep. As the decision to split the nodes pro‑ sidering the classi ication results of the several decision

ceeds, each attribute is taken into consideration. It trees employing different subsets of the original data, the

will try its best to it all the training data accurately, random forest method can effectively avoid over itting.

which leads it to learn too much about the features of However, directly proportional to the model complexity,

the training data and lose its ability of generalization.

the training cost of random forest model would be higher

• A decision tree is greedy and tends to ind the lo‑ than the decision tree model.

cal optimal solution instead of considering the global

optimal solution. At each step, it uses some tech‑ 3.2.5 XGBoost (XGB)

nique to ind the best node. However, the local best

XGBoost (XGB) is a successful machine learning model

node may not be the global best node.

based on a gradient boosting algorithm proposed by

Tianqi Chen [5]. Like random forests, gradient boosting

3.2.4 Random Forest (RF) is a set of decision trees. The two main differences are:

To overcome the shortcomings of DT , random forest • Gradient boosting builds one tree at a time, while

comes to the rescue. It was irst proposed by Tin Kam random forests builds each tree independently.

Ho in 1995 [23]. Random forest consists of a large num‑

• Gradient boosting combines the results along the

ber of individual decision tree that operate as an ensem‑

way, while random forests combines the results at

ble. In the training process of RF, each individual tree in

the end of the process.

the RF spits out a class prediction and the class with the

most votes becomes our model’s prediction (Fig. 6). Ran‑ XGBoost stands for extreme gradient boosting. It is a spe‑

dom forest models are so effective because a large num‑ ci ic implementation of the gradient boosting method that

ber of relatively uncorrelated models (trees) operating as delivers more accurate approximations using the second‑

a committee will outperform any of the individual con‑ order derivative of the loss function, advanced regulariza‑

stituent models. tion, and parallel computing.

© International Telecommunication Union, 2021 105