Page 164 - ITU-T Focus Group IMT-2020 Deliverables

[Figure labels: FLARE Switch; FLARE Slice 1 and FLARE Slice 2, each with a c-plane (MME, HSS and SP-GW c-plane in Docker instances) and a d-plane (SP-GW d-plane); Southbound API; Signaling; Slicer slice; eNB; Data PDN.]

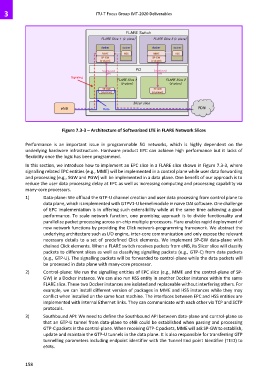

Figure 7.3-3 – Architecture of Softwarized LTE in FLARE Network Slices

Performance is an important issue in programmable 5G networks and depends heavily on the underlying hardware infrastructure. Hardware-based EPC products can achieve high performance, but they lack flexibility once their logic has been programmed.

In this section, we describe how to implement an EPC slice in a FLARE slice, as shown in Figure 7.3-3. Signalling-related EPC entities (e.g., the MME) are implemented in the control plane, while user data forwarding and processing entities (e.g., the SGW and PGW) are implemented in the data plane. One benefit of this approach is that it reduces user data processing delay at the EPC while increasing computing and processing capability through many-core processors.

1) Data-plane: We offload GTP-U channel creation and user data processing from the control plane to the data plane; in the unmodified OAI software, this processing is implemented with a GTPv1-U kernel module. One challenge of EPC implementation is offering such extensibility while still achieving good performance. To scale network functions, one promising approach is to divide functionality and parallelize packet processing across multiple on-chip processors. FLARE enables rapid deployment of new network functions by providing the Click network-programming framework. We abstract the underlying architecture, such as the I/O engine and inter-core communication, and expose only the relevant details through a set of predefined Click elements. We implement the SP-GW data plane with chained Click elements. When a FLARE switch receives packets from an eNB, its Slicer slice classifies the packets into different slices and also separates signalling packets (e.g., GTP-C) from data packets (e.g., GTP-U). Signalling packets are forwarded to the control plane, while data packets are processed in the data plane by the many-core processor.
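The Slicer step can be illustrated with a small Python sketch. It is not FLARE's Click implementation: it assumes packets arrive with the UDP destination port and TEID already parsed, and it uses a hypothetical TEID-to-slice table. The two "elements" are chained in order, loosely mimicking how Click elements are pushed one after another; the port numbers are the well-known UDP ports for GTP-C (2123) and GTP-U (2152).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

GTP_C_PORT = 2123  # well-known UDP port for GTP-C signalling
GTP_U_PORT = 2152  # well-known UDP port for GTP-U user data


@dataclass
class Packet:
    udp_dst_port: int
    teid: int                      # assumption: already parsed from the GTP header
    slice_id: Optional[int] = None
    plane: Optional[str] = None


# Hypothetical slice table: which TEIDs belong to which FLARE slice.
TEID_TO_SLICE: Dict[int, int] = {0x1001: 1, 0x2001: 2}

# An element returns the (annotated) packet, or None to drop it.
Element = Callable[[Packet], Optional[Packet]]


def slice_classifier(pkt: Packet) -> Optional[Packet]:
    """Tag the packet with its slice; packets with unknown TEIDs are dropped."""
    slice_id = TEID_TO_SLICE.get(pkt.teid)
    if slice_id is None:
        return None
    pkt.slice_id = slice_id
    return pkt


def plane_classifier(pkt: Packet) -> Optional[Packet]:
    """Send GTP-C to the control plane and GTP-U to the data plane; drop the rest."""
    if pkt.udp_dst_port == GTP_C_PORT:
        pkt.plane = "c-plane"
    elif pkt.udp_dst_port == GTP_U_PORT:
        pkt.plane = "d-plane"
    else:
        return None
    return pkt


def run_chain(elements: List[Element], pkt: Packet) -> Optional[Packet]:
    """Push the packet through each element in order, stopping if one drops it."""
    for element in elements:
        result = element(pkt)
        if result is None:
            return None
        pkt = result
    return pkt


if __name__ == "__main__":
    slicer = [slice_classifier, plane_classifier]
    out = run_chain(slicer, Packet(udp_dst_port=GTP_U_PORT, teid=0x2001))
    print(out.slice_id, out.plane)  # prints: 2 d-plane
```

Keeping each classification step as a separate element mirrors the idea of exposing only small, predefined building blocks; in a real many-core deployment, the data-plane branch would then be spread across cores.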

2) Control-plane: We run the signalling entities of the EPC slice (e.g., the MME and the control plane of the SP-GW) in a Docker instance. We can also run the HSS entity in another Docker instance within the same FLARE slice. These two Docker instances are isolated and can be replaced without interfering with each other. For example, we can install different versions of packages in the MME and HSS instances even though those packages might conflict if installed on the same host machine. The interfaces between the EPC and HSS entities are implemented with internal Ethernet links, over which the entities communicate using TCP and SCTP.
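One way to approximate this layout with the standard Docker CLI is sketched below in Python. The sketch only builds the command lines (a dry run) rather than executing them; the network name, container names and image names are hypothetical. Placing both containers on one user-defined bridge network gives each a virtual Ethernet interface for the MME-HSS link, and `--internal` keeps that network isolated from external networks.

```python
import shlex
from typing import List


def internal_network_cmd(network: str) -> List[str]:
    # "--internal" restricts external access to this bridge network.
    return ["docker", "network", "create", "--internal", network]


def container_cmd(name: str, image: str, network: str) -> List[str]:
    # One container per EPC entity, so their installed packages cannot conflict.
    return ["docker", "run", "-d", "--name", name, "--network", network, image]


def control_plane_cmds(slice_id: int) -> List[List[str]]:
    """Dry-run commands for one slice's control plane (all names are hypothetical)."""
    net = f"flare-slice{slice_id}-cp"
    return [
        internal_network_cmd(net),
        container_cmd(f"slice{slice_id}-mme-spgwc", "example/mme-spgwc:latest", net),
        container_cmd(f"slice{slice_id}-hss", "example/hss:latest", net),
    ]


if __name__ == "__main__":
    for cmd in control_plane_cmds(1):
        print(shlex.join(cmd))
```

Replacing the HSS then means stopping and re-running only the `slice1-hss` container. The MME container would additionally need connectivity toward the Slicer and data plane, which this sketch does not model.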

3) Southbound API: We need to define the Southbound API between the data plane and the control plane so that a GTP-U tunnel from the data plane to the eNB can be established when GTP-C packets are parsed and processed in the control plane. When receiving GTP-C packets, the MME asks the SP-GW to establish, update and maintain GTP-U tunnels in the data plane. It is also responsible for transferring GTP tunnelling parameters, including the Tunnel Endpoint Identifier (TEID), to eNBs.
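The data-plane side of such a Southbound API can be sketched as a tunnel table with create, update and delete operations that the control plane would invoke. The class name, method names and addresses below are hypothetical and do not describe FLARE's actual interface. The encapsulation itself follows the standard 8-byte GTPv1-U header (flags, message type, length, TEID) with message type G-PDU; this sketch handles only headers without optional fields.

```python
import struct
from dataclasses import dataclass
from typing import Dict, Tuple

GTPU_FLAGS = 0x30  # version 1, protocol type GTP, no E/S/PN optional fields
GTPU_GPDU = 0xFF   # message type G-PDU: carries encapsulated user data
GTPU_HDR = struct.Struct("!BBHI")  # flags, type, payload length, TEID (8 bytes)


@dataclass
class Tunnel:
    enb_ip: str    # eNB endpoint for downlink traffic
    enb_teid: int  # TEID the eNB expects on downlink packets
    sgw_teid: int  # TEID the data plane expects on uplink packets


class DataPlaneTunnels:
    """Hypothetical southbound interface of the SP-GW data plane, keyed by UE IP."""

    def __init__(self) -> None:
        self._by_ue: Dict[str, Tunnel] = {}
        self._ue_by_sgw_teid: Dict[int, str] = {}

    def create(self, ue_ip: str, tunnel: Tunnel) -> None:
        self._by_ue[ue_ip] = tunnel
        self._ue_by_sgw_teid[tunnel.sgw_teid] = ue_ip

    def update(self, ue_ip: str, enb_ip: str, enb_teid: int) -> None:
        # e.g. after a handover, point the downlink side at a new eNB
        tunnel = self._by_ue[ue_ip]
        tunnel.enb_ip, tunnel.enb_teid = enb_ip, enb_teid

    def delete(self, ue_ip: str) -> None:
        tunnel = self._by_ue.pop(ue_ip)
        del self._ue_by_sgw_teid[tunnel.sgw_teid]

    def encap_downlink(self, ue_ip: str, ip_packet: bytes) -> Tuple[str, bytes]:
        """Prepend a GTPv1-U header; returns (eNB address, GTP-U payload)."""
        tunnel = self._by_ue[ue_ip]
        header = GTPU_HDR.pack(GTPU_FLAGS, GTPU_GPDU, len(ip_packet), tunnel.enb_teid)
        return tunnel.enb_ip, header + ip_packet

    def decap_uplink(self, gtpu_payload: bytes) -> Tuple[str, bytes]:
        """Strip the GTPv1-U header; returns (UE address, inner IP packet)."""
        flags, msg_type, length, teid = GTPU_HDR.unpack_from(gtpu_payload)
        if flags != GTPU_FLAGS or msg_type != GTPU_GPDU:
            raise ValueError("only plain G-PDUs without optional fields are handled")
        ue_ip = self._ue_by_sgw_teid[teid]  # KeyError for unknown tunnels
        return ue_ip, gtpu_payload[GTPU_HDR.size:GTPU_HDR.size + length]


if __name__ == "__main__":
    dp = DataPlaneTunnels()
    dp.create("10.0.0.2", Tunnel(enb_ip="192.168.1.10", enb_teid=0x1234, sgw_teid=0x5678))
    dst, frame = dp.encap_downlink("10.0.0.2", bytes(20))
    print(dst, frame[:8].hex())  # prints: 192.168.1.10 30ff001400001234
```

Keeping two indexes (by UE address for downlink, by local TEID for uplink) lets both directions be looked up in constant time. The TEID values themselves would be signalled to the eNB by the control plane, which this sketch does not cover.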
