Page 53 - The Annual AI Governance Report 2025 Steering the Future of AI


1.2 Risks

The extraordinary momentum and widespread enthusiasm for AI's potential have created a vibrant landscape of innovation. Yet this rapid progress brings an equally urgent need to understand and mitigate the accompanying dangers. The very qualities that make AI so powerful (its speed, its scalability and its ability to act autonomously) are also the source of its most profound risks. Balancing this immense potential against an ever-evolving array of harms is the central challenge facing AI governance today.

As one speaker put it, we should avoid repeating three past mistakes:

1. Misnaming AI: Calling it "Artificial Intelligence" or "agents" attributes undue autonomy and agency to machines. We must continually question whether machines truly think or reason, and avoid past delusions about their capabilities.

2. Naivety about Downsides: As with social media, whose negative consequences we were initially blind to, we must not underestimate the potential "dark side" of AI.

3. Superficial Democratization: Just as mobile connectivity did not automatically deliver a digital economy to regions like Africa, we must go beyond superficial access to AI opportunities. Deeper enabling mechanisms, such as digital public infrastructure, are needed to truly stimulate demand and distribute benefits.

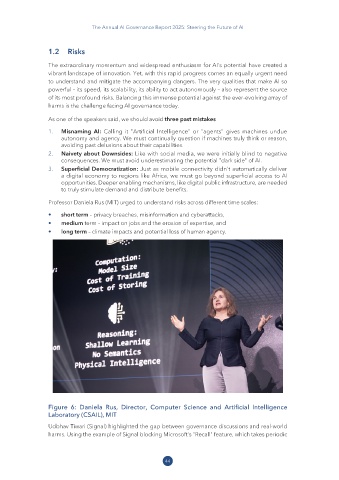

Professor Daniela Rus (MIT) urged that AI risks be understood across different time scales:

• short term – privacy breaches, misinformation and cyberattacks,

• medium term – impact on jobs and the erosion of expertise, and

• long term – climate impacts and potential loss of human agency.

Figure 6: Daniela Rus, Director, Computer Science and Artificial Intelligence Laboratory (CSAIL), MIT

Udbhav Tiwari (Signal) highlighted the gap between governance discussions and real-world harms. Using the example of Signal blocking Microsoft's "Recall" feature, which takes periodic
