Page 207 - Kaleidoscope Academic Conference Proceedings 2024


Innovation and Digital Transformation for a Sustainable World

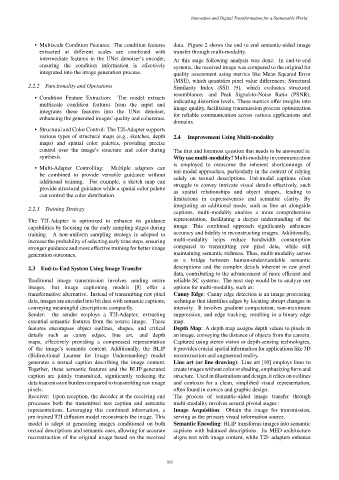

• Multiscale Condition Features: The condition features extracted at different scales are combined with the intermediate features in the UNet denoiser's encoder, ensuring that the condition information is effectively integrated into the image generation process.

2.2.2 Functionality and Operations

• Condition Feature Extraction: The model extracts multiscale condition features from the input and integrates these features into the UNet denoiser, enhancing the quality and coherence of the generated images.

• Structural and Color Control: The T2I-Adapter supports various types of structural maps (e.g., sketches, depth maps) and spatial color palettes, providing precise control over the image's structure and color during synthesis.

• Multi-Adapter Controlling: Multiple adapters can be combined to provide versatile guidance without additional training. For example, a sketch map can provide structural guidance while a spatial color palette controls the color distribution.

2.2.3 Training Strategy

The T2I-Adapter is optimized to strengthen its guidance by focusing on the early sampling stages during training. A non-uniform sampling strategy is adopted to increase the probability of selecting early (high-noise) time steps. This yields stronger guidance, more effective training, and better image generation outcomes.

2.3 End-to-End System Using Image Transfer

Traditional image transmission sends entire images, but image captioning models [8] offer a transformative alternative. Instead of transmitting raw pixel data, the image is encoded into bits carrying semantic captions, which convey meaningful descriptions compactly.

Sender: The sender employs a T2I-Adapter to extract essential semantic features from the source image. These features capture object outlines, shapes, and critical details in the form of Canny edges, line art, and depth maps, providing a compressed representation of the image's semantic content. In addition, the BLIP (Bootstrapping Language-Image Pre-training) model generates a textual caption describing the image content. The semantic features and the BLIP-generated caption are transmitted jointly, significantly reducing the amount of data sent compared with transmitting raw image pixels.

Receiver: Upon reception, the decoder at the receiving end processes both the transmitted text caption and the semantic representations. Leveraging this combined information, a pre-trained T2I diffusion model reconstructs the image. Because this model generates images conditioned on both textual descriptions and semantic cues, it can accurately reconstruct the original image from the received data. Figure 2 shows the end-to-end semantic-aided image transfer through multi-modality.

At this stage, the following analysis was performed. In end-to-end systems, the received image was compared with the original for quality assessment using three metrics:
- Mean Squared Error (MSE), which quantifies pixel-value differences.
- Structural Similarity Index Measure (SSIM) [9], which evaluates structural resemblance.
- Peak Signal-to-Noise Ratio (PSNR), which indicates the level of distortion.

These metrics offer insight into image quality and help optimize the transmission process for reliable communication across various applications and domains.

2.4 Improvement Using Multi-modality

The first question to answer is: why use multi-modality? Multi-modality in communication is employed to overcome the inherent shortcomings of uni-modal approaches, particularly those that rely solely on textual descriptions. Uni-modal captions often struggle to convey intricate visual details, such as spatial relationships and object shapes, which limits their expressiveness and semantic clarity. Adding a further modality, such as line art alongside captions, gives a more comprehensive representation and a deeper understanding of the image. This combined approach significantly improves the accuracy and fidelity of the reconstructed images. Multi-modality also reduces bandwidth consumption compared with transmitting raw pixel data, while maintaining semantic richness. Multi-modality thus bridges human-understandable semantic descriptions and the complex details inherent in raw pixel data, contributing to more efficient and reliable SC systems. The next step is to analyze the options for multi-modality:

Canny Edge: Canny edge detection is an image processing technique that identifies edges by locating abrupt changes in intensity. It involves gradient computation, non-maximum suppression, and edge tracking, and produces a binary edge map.

Depth Map: A depth map assigns a depth value to each pixel in an image, conveying the distance of objects from the camera. Captured using stereo vision or depth-sensing technologies, it provides crucial spatial information for applications such as 3D reconstruction and augmented reality.

Line art (or line drawing): Line art [10] uses lines to create images without color or shading, emphasizing form and structure. Used in illustration and design, it relies on outlines and contours for a clean, simplified visual representation and is often found in comics and graphic design.

The process of semantic-aided image transfer through multi-modality involves several pivotal stages:

Image Acquisition: Obtain the image for transmission, which serves as the primary source of visual information.

Semantic Encoding: BLIP transforms images into semantic captions with balanced descriptions. Its multimodal mixture of encoder-decoder (MED) architecture aligns text with image content, while T2I-Adapters enhance
