Page 88 - Kaleidoscope Academic Conference Proceedings 2020

P. 88

2020 ITU Kaleidoscope Academic Conference

(e.g., CPU cycles), resource utilization (i.e., amount of Resource adjustment

allocated resource utilized or kept busy), and performance

latency (i.e., amount of time taken by the VNF to process a

service request and provide the response). The data is

collected at the highest frequency supported by the system, RC Query (t 1 ) Front- Records

i.e. without hampering the system performance. Data is end DB

cleaned and processed to extract the most relevant features

and their values (e.g., mean, median, maximum or minimum

values) according to the correlation coefficient of the target Resource monitoring tool

variables. The data set is split into the training and test data EU

sets. Response (t 2 )

The offline training procedure is shown in Figure 3. To train VM Docker container Server daemon

the model, we fit regression model(s) with the training data

by tuning hyper-parameters. During the training execution, Figure 5 - Experimental setup for performance evaluation

each model is ranked based on their prediction errors and

training times. This training procedure is repeated until all

test data sets have been used. After that the best model that observation data are added to the training data set, the models

gives the least prediction error is selected. are retrained online by using a copy of the most recent

training data set. The retrained models replace the old ones

3.3. Online retraining at the beginning of the next time slot. If workload variation

is significant, a small value of Z is preferred, and vice versa.

With the adoption of online retraining of the model, we can This approach of model retraining at regular intervals is

dynamically update the model to make it capable of dealing useful for tackling with the unknown nature of future

with changing workload patterns and system status. The workload variations. Note that multiple trained models can

flowchart of dynamic resource adjustment with online be used to predict the proper values of resource allocation.

retraining of regression models is shown in Figure 4. At the We can select only one model or create an ensemble of

interval of every second, the current workload is observed various models for improving the prediction accuracy.

from the system and fed to the best-trained model that

determines the amount of required resources to maintain the 4. PERFORMANCE EVALUATION RESULTS

target values of latency and utilization metrics. Accordingly,

the decision of either to increase or decrease the amount of In this section, we describe the parameter settings of the IoT-

allocated resource is executed by using control commands of DS experimental system, its setup, input workload patterns,

the container platform. In parallel, we append the currently evaluation criteria, and evaluation results.

observed workloads, performance metrics, resource

allocation and utilization data to the training data set (as the 4.1. Experimental setup and considerations

latest entry). Since a fixed training data set size ensures the

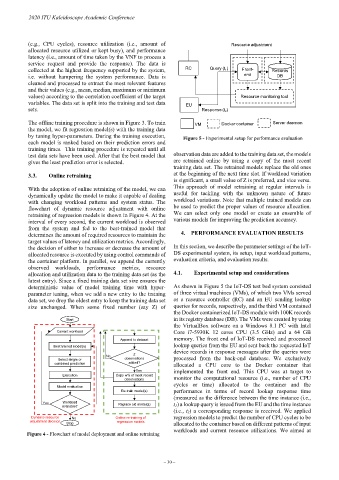

deterministic value of model training time with hyper- As shown in Figure 5 the IoT-DS test bed system consisted

parameter tuning, when we add a new entry to the training of three virtual machines (VMs), of which two VMs served

data set, we drop the oldest entry to keep the training data set as a resource controller (RC) and an EU sending lookup

size unchanged. When some fixed number (say Z) of queries for records, respectively, and the third VM contained

the Docker containerized IoT-DS module with 100K records

Start in its registry database (DB). The VMs were created by using

the VirtualBox software on a Windows 8.1 PC with Intel

Current workload Core i7-5930K 12 cores CPU (3.5 GHz) and a 64 GB

Append to dataset memory. The front end of IoT-DS received and processed

Best trained model(s) lookup queries from the EU and sent back the requested IoT

device records in response messages after the queries were

No Z

Select single or observations processed from the back-end database. We exclusively

combined prediction added? allocated a CPU core to the Docker container that

Yes implemented the front end. This CPU was at target to

Execution Copy w% of most recent

observations monitor the computational resource (i.e., number of CPU

cycles or time) allocated to the container and the

Model evaluation

Re-train model(s) performance in terms of record lookup response time

(measured as the difference between the time instance (i.e.,

Yes Workload Replace old model(s) t1) a lookup query is issued from the EU and the time instance

available?

(i.e., t2) a corresponding response is received. We applied

Dynamic resource No Online re-training of regression models to predict the number of CPU cycles to be

adjustment decision Stop regression models allocated to the container based on different patterns of input

workloads and current resource utilizations. We aimed at

Figure 4 - Flowchart of model deployment and online retraining

– 30 –