The objective of this chapter is to advise the ITU Secretary-General on potential ITU support for technical and procedural measures building confidence and security in the use of ICTs in support of the cyber-ecosystem, including its resources and assets, people, critical infrastructures and supporting technologies, taking into account the scope of ITU activity under its Constitution and Plenipotentiary 2006 Final Acts Resolution 130.

ITU-T Recommendation X.1205 defines cybersecurity as:

“the collection of tools, policies, guidelines, risk management approaches, actions, training, best practices, assurance and technologies that can be used to protect the cyber environment and organization and user’s assets. Organization and user’s assets include connected computing devices, users, applications, services, telecommunications systems, and the totality of transmitted and/or stored information in the cyber environment. Cybersecurity ensures the attainment and maintenance of the security properties of the organization and user’s assets against relevant security risks in the cyber environment. The security properties include one or more of the following: availability; integrity (which may include authenticity and non-repudiation); confidentiality”.

This definition, in its attempt at completeness, is necessarily broad and may be difficult to apply beyond general situations. ITU-D’s work on cybersecurity and critical infrastructure protection (CIP) provides a helpful framework from which to consider the specific cybersecurity and other information security and network security issues that are relevant to policy-makers addressing these challenges from a national perspective (Figure 2.1). This framework distinguishes between:

• Critical Infrastructure Protection (CIP): Identifying, assessing, and managing risks to deter or mitigate attacks and promote resiliency.

• Critical Information Infrastructure Protection (CIIP): Focusing on specific IT risks to deter or mitigate attacks and promote resiliency.

Figure 2.1: Framework for national infrastructure protection

One aspect not depicted in Figure 2.1 is specific applications of software assurance. Product assurance focuses on ensuring that software, hardware and services function as intended and are free (to the greatest extent possible) from intentional and unintentional vulnerabilities. System assurance ensures that, as far as possible, protocols, software, hardware and services are free from intentional and unintentional vulnerabilities and malevolent functions.

The Common Criteria for Information Technology Security Evaluation (or Common Criteria, CC, ISO/IEC 15408) is an international standard for computer security. It provides a framework for computer system users to specify their security requirements, for vendors to implement and/or make claims about the security attributes of their products, and for evaluation laboratories to test the products to determine whether they actually meet the claims. The ISO/IEC 27000-series comprises information security standards, published jointly by the ISO and the IEC (see Section 3.6 in Chapter 3). This series provides best practice recommendations on information security management, risks and controls, within the context of an overall Information Security Management System.

2.3. Cybersecurity: Issues, Technologies & Solutions

This Section provides a summary of current issues in cybersecurity, technologies and solutions. Additional information can be found in related sources, including the GCA brochure.

2.3.1. The Growing Importance of Cybersecurity

As an increasing number of artifacts and processes become digitized and are accessible through a variety of networked devices, the risks posed by cyberthreats continue to grow in importance. Digital assets and processes have displaced older paper-based processes and are involved in global data flows, traversing diverse networks and devices with varying levels of protection. These networks and devices adhere to different sets of rules and may be located in different regulatory environments. With the growing portability of devices and emergence of multi-network communications and data services, user access to digital data and services is growing exponentially.

In today’s global economy, information flows internationally across borders, and is processed in multiple localities by many businesses, as part of everyday commercial transactions, creating jurisdictional and other issues involving the applicability of local privacy and information security laws.

There has been an explosion in new technologies and devices, many of which are portable and based on wireless technologies – the proliferation of devices is also increasing the raw amount of data being transferred, downloaded, processed, stored and exchanged. For example, portable devices can change the physical location of information (by carrying a USB key or the use of a portable media player). These kinds of transfers are hard to intercept or regulate through communications-oriented policies or protocols. Data is also being combined in unexpected ways to produce new information, which itself is vulnerable.

These trends can help boost economic growth, but they create numerous security and privacy concerns, including:

• How can an acceptable level of security be established to build consumer trust in the digital economy?

• How can successful security and risk management practices be identified and promoted for critical infrastructure protection?

• What can the ITU do to promote common approaches, without advocating regulatory regimes that may restrict the flexibility of governments and critical infrastructure owners and operators?

• How can the ITU help create a favorable environment for continued growth of the Internet economy by encouraging continuing investment and new business development?

Although new technologies for protecting networks, devices, and applications are being developed at an ever-faster pace, threats and vulnerabilities in dynamic multinational computing environments are growing even more quickly, driven by the ubiquitous nature and diversity of today’s communications and computing technologies.

Formerly, it was sufficient to protect network perimeters and computing devices within an enterprise to preserve the integrity of a trusted network. Now, however, the network perimeters are becoming fuzzier and more difficult to protect. Many mobile (and sometimes virtual) devices can be used both inside and outside of the trusted area, multiple interconnected networks are often in operation with different levels of security, and numerous classes of users may be authorized inside and outside of the trusted zone. This disappearance of traditional perimeters has boosted efficiency, flexibility and innovation. However, promoting the security and resiliency of the ICTs we now depend on is a highly complex challenge.

Cybercrime is now highly profitable. Criminals are investing substantial sums of money in researching and funding new types of attacks, while vendors and CI providers continually strengthen their products, systems, and services. Attackers are now targeting software applications that were never previously targeted. Today’s stealthy targeted attacks, malware, viruses and worms can spread from networked printers to other printers or intelligent (readable or modifiable) devices and can now target next-generation devices, such as smart phones, which may be connected to the Internet.[1]

Absolute or perfect technical security is impossible. A key objective is to improve risk management practices for ICT-supported functions across government and critical infrastructure, whilst also increasing resiliency and the ability to withstand attacks. Resiliency does not equate to complete risk elimination, however. The ultimate goal is to reduce risks to acceptable levels, based on improved technology and processes and to make security measures as efficient and cost-effective as possible. Realizing this goal needs flexible processes and procedures, which can be adapted to an increasingly dynamic threat environment.

2.3.2. Ongoing Efforts to Promote Cybersecurity and CIP

ITU is currently carrying out vital work in cybersecurity and CIP in both ITU-D and ITU-T. For example, ITU-T Study Group 13 is leading a large-scale initiative on standards for Next Generation Networks (NGN) and serves as the global forum for regional NGN work occurring in other bodies (such as ETSI TISPAN, 3GPP/CommonIMS, ATIS, CableLabs, IETF, etc.). NGN is built on Internet Protocol (IP), offering a rich variety of converged services over fixed and mobile networks and/or technologies with generalized mobility. Security is one of the defining features of NGN. SG 13 NGN security studies are developing network architectures that provide for:

• maximal network and end-user resource protection;

• support for co-existence of multiple networking technologies;

• end-to-end security mechanisms;

• security solutions that apply over multiple administrative domains;

• secure identity management – for example, in security solutions (e.g. content/service/network/terminal protection) for IPTV that are cost-effective and have an acceptable impact on performance, quality of service, usability and scalability.

Another major development in ITU-T is identity management (IdM). A broad range of IdM issues of concern to telecommunication network/service providers, governments and end-users is being addressed, including the assertion and assurance of entity identities (e.g. users, devices, service providers). The following non-exhaustive list notes some of these issues:

• support of subscriber services (e.g. NGN services and applications) using common IdM infrastructure to support multiple applications including inter-network communications;

• secure provisioning of network devices;

• ease of use and single sign-on / sign-off;

• public safety services, international emergency and priority services;

• electronic government (e-government) services;

• privacy/user control of personal information;

• security (e.g. confidence of transactions, protection from identity theft) and protection of NGN infrastructure, resources (services and applications) and end-user information.

ITU’s non-binding recommendations and collaborative structure provide an excellent environment for cooperation. Although cybersecurity and CIP issues benefit from standardization efforts, they cannot be resolved through standards alone.

2.3.3. Means of Protection in Today’s Complex Environment

The cyber-ecosystem is characterized by the extreme complexity and diversity of today’s computing environment. Operating systems (OS), applications, devices and networks all have different types of vulnerabilities and use different means of protection. Within the elements of a computing environment, common applications (e.g., email) contain inherent protection mechanisms designed to enhance their security. There is a substantial literature on the risks associated with various components of modern computing environments and the different remedies available (for example, the notion of Layered Defense is common within the industry). For systems providing end-to-end communications, ITU-T Recommendation X.805[2] provides a comprehensive and systematic reference model based on three security layers (Applications, Services, Infrastructure), three security planes (End-user, Control, Management), and eight security dimensions (Access control, Authentication, Non-repudiation, Data confidentiality, Communication security, Data integrity, Availability, Privacy).
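The structure of the X.805 reference model can be illustrated as a simple checklist generator. The following is a minimal sketch only: the layer, plane and dimension names are taken from the paragraph above, while the function names `assessment_grid` and `open_items` are hypothetical and are not part of the Recommendation.

```python
from enum import Enum
from itertools import product


class Layer(Enum):
    APPLICATIONS = "Applications"
    SERVICES = "Services"
    INFRASTRUCTURE = "Infrastructure"


class Plane(Enum):
    END_USER = "End-user"
    CONTROL = "Control"
    MANAGEMENT = "Management"


class Dimension(Enum):
    ACCESS_CONTROL = "Access control"
    AUTHENTICATION = "Authentication"
    NON_REPUDIATION = "Non-repudiation"
    DATA_CONFIDENTIALITY = "Data confidentiality"
    COMMUNICATION_SECURITY = "Communication security"
    DATA_INTEGRITY = "Data integrity"
    AVAILABILITY = "Availability"
    PRIVACY = "Privacy"


def assessment_grid():
    """Return every (layer, plane, dimension) combination as a checklist.

    Hypothetical helper: three layers x three planes x eight dimensions.
    """
    return list(product(Layer, Plane, Dimension))


def open_items(reviewed):
    """Return the grid cells that are not yet in the `reviewed` set."""
    return [cell for cell in assessment_grid() if cell not in reviewed]


if __name__ == "__main__":
    done = {(Layer.SERVICES, Plane.CONTROL, Dimension.PRIVACY)}
    print(len(assessment_grid()), "cells in total,", len(open_items(done)), "still open")
```

Enumerating the combinations in this way makes it explicit that each security dimension is considered once for every layer and plane, rather than once for the system as a whole.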

Over time, tools and mechanisms to protect networks, servers and clients have been introduced for all categories of users, and many have become common. While organizational networks are typically protected by firewalls and intrusion detection or prevention tools, the use of further safeguards such as extrusion detection is increasing. Access control is increasingly enforced and audited. Secure networking protocols comprising authentication and traffic encryption are used, protecting the integrity and confidentiality of data in transit.
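As an illustration of how authentication protects the integrity of data in transit, the sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library. It assumes that sender and receiver already share a secret key, it covers integrity and origin authentication only (not confidentiality), and the function names `sign` and `verify` are hypothetical. Deployed secure networking protocols combine such checks with encryption, key exchange and replay protection.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message with the shared key."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign(key, message), tag)


if __name__ == "__main__":
    shared_key = b"example-shared-key"  # assumption: distributed out of band
    packet = b"transfer 100 units to account 42"
    tag = sign(shared_key, packet)
    print("unmodified packet accepted:", verify(shared_key, packet, tag))
    print("tampered packet accepted:", verify(shared_key, packet + b"0", tag))
```

A receiver that rejects any message whose tag fails verification detects both modification in transit and messages from parties that do not hold the key.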

For servers, physical separation, 24-hour monitoring and strong configuration management support generic protection technologies, such as encryption for stored data at rest, access control, or anti-virus tools. For client devices, various measures have become standard to protect against software attacks. Personal firewalls, anti-virus and anti-spyware tools are becoming pervasive, whilst more secure and regularly patched operating systems and applications have been developed, using security- and privacy-conscious design approaches to help solve many security problems. The security of accounts has advanced considerably, through more widespread identity management and stricter access control, and the security of data at rest is more visible and enjoys rapidly growing adoption. Innovative methods of building a safer computing environment (such as trusted computing) are being adopted by the mass market.

Despite considerable success in ensuring greater security, current methods of protection for networks, computers and data are insufficient. The widespread use of exclusionary models of protection (for example, firewalls, intrusion detection tools, access control and similar methods) is now less effective, given the growth of highly mobile networked devices. The problem with exclusionary models is that they require computationally feasible ways to distinguish what needs to be excluded from what is legitimate. This distinction is increasingly blurred, and often cannot now be resolved without constant input from users. The balance of usability and security limits what can be achieved through exclusionary models. Further, the diversity of operations spanning multiple networks and thousands of systems (accessed by millions of users) makes it impossible to analyze all the elements in need of protection, while the mobility of devices containing confidential information makes them targets for attack.

Effective cybersecurity measures are not limited to detection and remediation technologies, but need to cover a comprehensive set of components and techniques, ranging from diverse networks and infrastructure (including critical infrastructure) to servers, clients, applications, and services, users and personnel. Successful approaches include best practices for operations, measurements and provisioning, use of proven secure architectures, reliable incident reporting, sound remediation plans, consistent policies for AAA (Authentication, Authorization and Audit) and other indispensable activities.

Whilst technological solutions and procedures (such as those above) can provide a foundation for improved cybersecurity, no solution is complete without adequate training and education for those making use of modern technological systems. Malicious software and miscreants wishing to gain unauthorized access to data and information have rapidly shifted tactics to embrace “social engineering” as a key method for evading many defense strategies. With regular education and awareness campaigns to explain new tactics and teach IT users how to address security more critically, it is possible to begin to address threats to cybersecurity and other information security and network security issues.

2.3.4. Servers, Clients, Diverse Networks

In theory, only authorized users and applications can access protected systems and networks, and they are allowed to perform only the functions permitted for the types of accounts that they hold. In reality, the picture is not so clear-cut. The notion of a perimeter separating protected (trusted) organizational networks from public networks is disappearing.

Other key trends in security must also be taken into account in the development of successful future approaches to end-to-end security:

1. Externalization of security components: Many organizations have built extensive security infrastructures (including PKIs, firewalls, intrusion detection systems and identity management systems). Using such safeguards to enable security in software and sometimes hardware applications is now widespread.

2. Centralization of security functions is another consequence of the emergence of organizational security infrastructures.

3. Use of open standards and increasing reliance on self-certification: Open standards are commonly used in security systems to ensure higher levels of interoperability, but diversity of implementation makes the value of a certification review (or self-certification based on a shared framework) much greater.

It is difficult to guard against the misuse of legitimate and common security technologies. Legitimate technologies (such as data encryption and VPN) may be used to carry out illegal activities. In order to enhance security for all, it is vital to define mechanisms that reduce illegal uses of security technologies, without impeding innovation and growth in legitimate applications.

For example, encryption is a fundamental component of effective security for information, including personal data. As with any technology, encryption can be used for either good or bad purposes. Effective enforcement of laws (e.g. against hacking, identity theft, etc.) is vital in establishing trust in individuals’ use of technology. However, law enforcement should not regulate the technology itself or it could potentially chill innovation.

2.3.5. Diverse Environments and Levels of Protection

Not all components of a communications environment enjoy the same level of protection. In some cases, lighter protection is applied to certain components, as a legacy from older technology, where sensitive information and important access points were located away from certain elements (such as client PCs and ultra-mobile devices). This situation has changed over the last decade.

PCs can store huge amounts of sensitive information, and consequently many attacks are now directed at networked clients. With greater computing power and practically unlimited storage capacity, modern PCs are repositories of highly confidential information, aggregating data from multiple sources. A breach aimed at a PC may be the equivalent of a breach affecting several business-critical servers. However, PCs are lightly protected compared to servers and networks (Figure 2.2 below).

Ultra-mobile devices (such as PDAs and smart phones) are increasingly involved in day-to-day business operations and are often used to transfer or store sensitive information. If lost or stolen, they can compromise entire organizational networks. Ultra-mobile devices are increasingly used in sensitive professional environments (e.g. healthcare or finance), where security is essential. Consistent protection of these devices is still in its infancy and needs urgent attention.

Figure 2.2: Client Protection versus Server and Network Protection

In addition to different levels of assurance among the elements of a modern computing environment, there is often no homogeneity inside these elements, with different networks offering varying levels of protection. For example, Wi-Fi (IEEE 802.11x) gradually acquired security features (such as authentication and traffic encryption) that protected data integrity and confidentiality between access devices and the access point. Subsequent technologies (such as WiMAX) follow the approach taken for Wi-Fi, but define their security models earlier in the standards development cycle. However, in the case of sensor networks, for example, security approaches are still immature and not yet ready for commercial implementation. As network traffic becomes increasingly integrated, defining security solutions applicable to all networks and to NGN continues to be a priority.

Protection of servers varies depending on their function and cannot be defined across the board. It is premature to discuss general levels of protection of client machines, where many of the security features are still optional and depend on the expertise of owners and end-users.

Today’s computing environment is global, with data flows traversing many geographies, and users accessing networks and applications from virtually anywhere. Security requirements vary widely in different segments of global computing environments, ranging from acceptable (but not impregnable) to environments where security is frequently overlooked.

There are significant differences in the levels of security between organizational and consumer networks and computing environments spread across different geographies. However, public and enterprise networks frequently interact and often use the same devices for access, so such separation is increasingly symbolic. The need to produce a safer environment for consumers using the Internet is now crucial.

Even within the same geography, and among similar organizations, levels of protection are often not comparable. For example, higher education institutions in the same country offer vastly different levels of assurance in their computing environments.

While much attention has been paid to the development of security technologies, some components have been overlooked until recently. Standardization is a good indicator of the level of maturity of a technology, and the recent emergence of new standards in key areas (e.g., in encryption for data at rest and node encryption for organizational networks in IEEE) underlines the vital importance of standards.

Although cybersecurity has always been an issue for national security and treated differently in different countries, common approaches are supported by commonly recognized standards. However, local approaches are still strong, especially in encryption. This can hinder both the interoperability and security of systems, since the robustness of an untested cipher cannot be guaranteed and interoperability is difficult, if ciphers have not been published. Since encryption is a foundation of security and privacy, shared approaches to encryption at an international level are very important.

2.3.6. Nature of Attacks

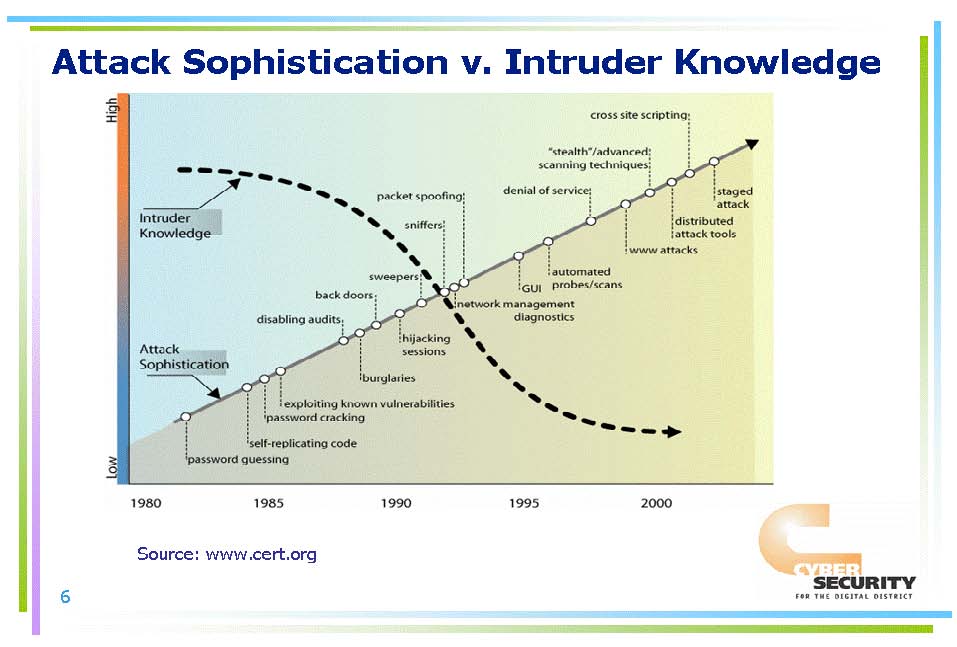

Whilst the computing environment has become more diverse and globally connected, the sophistication of cyber-attacks has continued to grow. Although the sophistication of attacks grows ahead of available protection technologies, the knowledge needed by an attacker to commit a successful security breach continues to decrease, as illustrated in Figure 2.3 below. The proliferation of easy-to-use hacking tools makes it possible for even inexperienced attackers to cause significant damage.

Figure 2.3: Sophistication of Attacks versus Attackers’ Knowledge. Source: www.cert.org

Other key trends in attacks are also evident. First, attack vectors are now moving up and down the stack. There is increased targeting of firmware, as well as targeting of the application layer. Attackers no longer focus mainly on the OS. This expansion means that technology developers that used to be detached from security issues now need to focus directly on security and providing acceptable levels of assurance. All components in the stack need to be developed with security in mind, weighing agility and usability against security risks.

Security solutions now need multiple layers of security to protect the overall platform. No single technology provider can solve the end-user trust issue; technologists in areas spanning from firmware to networks and client-side applications need to work together to ensure trustworthy computing.

The monetization of cybercrime means that hackers can make substantial sums of money from exploit code, botnets and data theft. This criminal economy drives proliferation and innovation in threats. Economic incentives for security breaches need to be reduced to create an environment where cybersecurity breaches are less profitable for perpetrators.

2.3.7. Categories of Risk

Since today’s computing environment is so complex, it is helpful to consider technology trends within a simplified framework. Several threat categorizations have been developed. ITU-T X.800[3] classifies threats to data communication systems, based on the following categories:

1. Destruction of information and/or other resources;

2. Corruption or modification of information;

3. Theft, removal or loss of information and/or other resources;

4. Disclosure of information; and

5. Interruption of services.

US CERT has defined six categories of security incidents that provide a good tool to link security incidents to technology issues. This categorization can be used to describe the major risk categories in cybersecurity and outline available remedies:

Figure 2.4: A Simplified framework for categorizing threats

ITU-T Study Group 17 Question 7 outlines a categorization scheme for the analysis of various security risks, based on six categories. Technological solutions are available to mitigate damage arising from incidents in the first five categories (and potentially, the sixth):

1) Category 1 – Unauthorized Access

This category is dedicated to events associated with unauthorized access where an individual gains access to networks, applications, or services that he/she is not authorized to use.

For unauthorized access (Category 1), stronger and/or dynamic passwords, strong (multi-factor) authentication, protected storage of artifacts associated with access to systems, and auditing can help improve the situation. When device authentication is combined with user authentication, the results are even more positive. For certain applications (including online banking and government/citizen interactions), stronger access control is now the norm. As identity management and multi-factor authentication technologies are combined with protected storage of credentials, security is likely to improve in other areas as well.
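The “dynamic passwords” mentioned above are commonly implemented as one-time codes derived from a shared secret. The sketch below follows the publicly specified HOTP (RFC 4226) and TOTP (RFC 6238) constructions using only the Python standard library. It is offered as an illustration rather than as the method used by any particular service; the function name `check_second_factor` is hypothetical, and a production system would also need secure storage of the secret, tolerance for clock drift, and protection against repeated guessing.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Counter-based one-time password (RFC 4226, HMAC-SHA1)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, now=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password: HOTP over the current time step."""
    if now is None:
        now = time.time()
    return hotp(secret, int(now) // step, digits)


def check_second_factor(secret: bytes, submitted: str, now=None) -> bool:
    """Hypothetical helper: compare a submitted code with the expected one."""
    return hmac.compare_digest(totp(secret, now), submitted)


if __name__ == "__main__":
    demo_secret = b"12345678901234567890"  # test secret used in the RFCs
    print("current code:", totp(demo_secret))
```

Because each code is valid only for a short time step, a password captured by an attacker is of limited use, which is why such codes are typically combined with a static password to form a second authentication factor.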

2) Category 2 – Denial of Service (DoS) Attacks

This category describes DoS attacks, in which the normal functioning of networks and applications is impeded because they are flooded with automatically generated requests. Such attacks can be stopped or reduced if service providers use configurations that stop repeated requests to systems (including Domain Name System, or DNS, servers). Configurations can also be adapted to recognize and block infected machines relaying repeated requests. Protecting and hardening clients against unauthorized access and use in DoS attacks can considerably reduce the risks associated with DoS.
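One way of stopping repeated requests is to limit how many requests each client may make within a given time window. The following is a minimal sketch of a per-client sliding-window limiter; the class name `SlidingWindowLimiter` and its parameters are hypothetical, and the approach shown does not by itself address floods distributed across many source addresses.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client in any `window_seconds` span."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # client id -> accepted timestamps

    def allow(self, client_id: str, now: float) -> bool:
        recent = self._history[client_id]
        # Discard timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_requests:
            return False  # over the limit: reject (rejections are not recorded)
        recent.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_requests=3, window_seconds=1.0)
    for t in (0.0, 0.1, 0.2, 0.3, 1.05):
        print(t, limiter.allow("203.0.113.7", now=t))
```

Passing the timestamp explicitly keeps the sketch deterministic; a deployed service would normally read a monotonic clock and would also expire idle clients from the history.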

Security needs for DNS encompass all name systems, including notably the ITU-T’s own name system, in addition to the IETF DNS. This is significant because the IETF DNS namespace is not within the ITU’s remit, but the ITU-T’s own namespaces are, and the ITU needs to attend to the cybersecurity of those important namespaces. The ITU OID name system in particular is important in helping promote cybersecurity.

Security needs today exist mainly at the lower layers of systems and not at the roots. It is unclear which privacy requirements exist within DNS support infrastructures. Privacy requirements do not, however, include:

1) the actual query-response servers exchanging routing information; or

2) root information encompassing only government agencies, institutions or commercial companies, which do not enjoy privacy rights.

3) Category 3 – Malicious Code

This category covers cases where malicious code (a bot, a Trojan, a virus, or spyware) infects or affects the OS or applications. Regularly updated anti-virus and anti-spyware systems can help protect against infection by generic malicious code. Unfortunately, attackers are starting to use malicious code customized for the environment in which it operates. As a result, new approaches are needed, such as hardware systems that are sensitive to unauthorized changes in configurations. New hardware security solutions (along with best practices such as the auditing and monitoring of both incoming and outgoing packets) can significantly reduce damage from customized malicious code. Systems serving as distribution channels for malicious code need to be identified and removed from the network.

End-point security software continues to evolve to provide better protection. For example, point solutions for anti-virus, anti-spyware and personal firewalls are now being integrated into ‘endpoint security software’. Host Intrusion Prevention System (HIPS) technology, data leakage prevention and application control features are also being integrated. Technologies such as HIPS are very powerful in enforcing correct protocol and application behavior, which is especially useful in combating new zero-day application attacks. HIPS can also provide virtual patches to shield known vulnerabilities. This is vital in ‘hard to patch’ environments – for example, where critical servers need extensive testing before a patch is deployed, where servers cannot be rebooted easily, or where no patch exists yet.

4) Category 4 – Improper Use of Systems

This category describes cases where users violate acceptable usage policies. Training, monitoring, and auditing are vital for combating improper use incidents under Category 4. Technology can be used to partly mitigate risks from improper usage of systems (e.g. better user interfaces and security-conscious system architecture), but only administrative control measures and consistent application of preventive processes and procedures can fully mitigate this risk.

5) Category 5 – Unauthorized Access and Exploitation

This is the largest category of attack (scans, probes and attempted access), in which unauthorized parties try to identify computers, services, open ports and protocols, and to collect and exploit information about them. For such events, effective firewalls that mask systems and ports, intrusion detection systems, and constant system auditing and monitoring can help protect environments. Organizations can design their internal network topology to provide additional risk mitigation for servers and clients. For consumer client systems, platform vendors and ISPs must ensure that only edge systems complying with minimal levels of acceptable security (including up-to-date patches, personal firewalls and anti-virus systems) can access full Internet services. Networks are porous, and everything is open to the Internet. Instead of relying solely on perimeter protection for networks, the same protective approach needs to be applied to host computers as well, as another layer of defense.
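The requirement that only compliant edge systems receive full Internet access can be pictured as a simple admission check. In the sketch below, the three conditions are those named in the paragraph above, while the `Posture` type, the function names and the "full"/"restricted" access levels are hypothetical. How a platform vendor or ISP would reliably measure these conditions is outside the scope of the sketch.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Posture:
    """Self-reported security state of an edge system (illustrative only)."""
    patches_current: bool
    firewall_enabled: bool
    antivirus_current: bool


def missing_requirements(posture: Posture) -> list:
    """Return the names of the minimal requirements that are not met."""
    return [name for name, ok in asdict(posture).items() if not ok]


def access_level(posture: Posture) -> str:
    """Grant 'full' access only when every minimal requirement is met."""
    return "full" if not missing_requirements(posture) else "restricted"


if __name__ == "__main__":
    laptop = Posture(patches_current=True, firewall_enabled=False, antivirus_current=True)
    print(access_level(laptop), missing_requirements(laptop))
```

Reporting which requirements are missing, rather than only refusing access, gives the owner of the device a path to remediation.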

6) Category 6 – Other Unconfirmed Incidents

The last category is dedicated to unconfirmed incidents needing further investigation to determine whether they are malicious in nature. Technological solutions are available to mitigate potential damage arising from incidents in the first five categories and potentially, the sixth, if an incident is proven to be an attack.

Although the remedies listed above are not a complete list of the measures needed for effective remediation, the ITU-T SG 17 categorization provides a useful framework for risk analysis.
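For triage purposes, the six categories and the remedies discussed above can be held in a simple lookup table. The sketch below is illustrative only: the category names and remedies paraphrase this section, the list of remedies is not exhaustive, and the names `INCIDENT_CATEGORIES` and `remedies_for` are hypothetical.

```python
# Category number -> (name, remedies summarized from the text above).
INCIDENT_CATEGORIES = {
    1: ("Unauthorized access",
        ("stronger or dynamic passwords", "multi-factor authentication",
         "protected storage of credentials", "auditing")),
    2: ("Denial of Service (DoS) attacks",
        ("blocking repeated requests", "blocking infected machines",
         "hardening of clients")),
    3: ("Malicious code",
        ("updated anti-virus and anti-spyware", "hardware sensitive to configuration changes",
         "auditing and monitoring of incoming and outgoing packets")),
    4: ("Improper use of systems",
        ("training", "monitoring", "auditing")),
    5: ("Unauthorized access and exploitation (scans, probes, attempted access)",
        ("firewalls", "intrusion detection", "system auditing and monitoring")),
    6: ("Other unconfirmed incidents",
        ("further investigation",)),
}


def remedies_for(category: int) -> tuple:
    """Return the remedies recorded for a category, or raise ValueError."""
    if category not in INCIDENT_CATEGORIES:
        raise ValueError(f"unknown incident category: {category}")
    _name, remedies = INCIDENT_CATEGORIES[category]
    return remedies


if __name__ == "__main__":
    for number, (name, remedies) in INCIDENT_CATEGORIES.items():
        print(f"Category {number}: {name} -> {', '.join(remedies)}")
```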

2.3.8. Reasonable Use of Cryptography

The use of encryption technologies is pervasive in commercial off-the-shelf software products, particularly common software applications (e.g., web browsers and email programs). Cryptography is a vital component driving many of the security technologies described above. From accessing email and premium content to protecting network traffic and critical assets, from accessing bank accounts to making travel arrangements, encryption is pervasive and omnipresent.

The mass deployment of new technologies has massively multiplied the amount of digital data transferred and stored, as well as the need for encryption-based security technologies. As components of security applications move from software and networks to hardware, encryption technologies must also evolve. Some security risks can be mitigated through robust, peer-reviewed public encryption ciphers and internationally inter-operable cryptography standards.

2.3.9. Security and Privacy

Building secure systems is impossible without taking privacy concerns into account. In today’s era of interconnected and interoperable networks, information transmitted in basic functions can severely compromise users’ privacy and needs to be protected and used on a “need to know” basis. Data processing, analysis, and monitoring services must be designed with the users’ privacy in mind.

Another key development with strong implications for user privacy and network security is the proliferation of inexpensive and highly mobile storage devices. Such devices (e.g., flash drives) are often unprotected, but frequently used to store confidential or sensitive data. Other mobile devices (e.g., smart phones and PDAs) are also a treasure trove of important data, but continue to be relatively unprotected.

Privacy-conscious technology solutions should be used consistently in building secure environments, allowing the owners of information to control its release and actively select privacy-friendly options. Without prioritizing privacy, users’ trust in the digital economy cannot be preserved. User awareness and education are both vital parts of successful security solutions.

2.3.10. Incident Response

The growing sophistication of attacks, combined with new, easy-to-use hacking tools, raises the risk of serious damage resulting from a cyber-attack. Governments and enterprises alike must prepare for inevitable emergencies, whether unintentional or malicious. Key to this preparation is the establishment of an emergency incident response capability.

Computer Security Incident Response Teams (CSIRTs) or Computer Emergency Response Teams (CERTs) respond to computer security incidents within their constituency or area of responsibility, working to resolve them and to prevent further incidents. CSIRTs can be formed to serve government agencies, vendors and/or commercial enterprises.

Incident response capability must perform three essential functions:

1. Build trusted relationships with constituents, so the team can establish effective processes for working with its partners to monitor, identify and analyze attacks and vulnerabilities;

2. Work with vendors to disseminate cyber-threat warning information to constituents rapidly; and

3. Develop and exercise incident response capabilities (in cooperation with other organizations) that enable the CSIRT/CERT to assist constituents throughout the attack, from detection to recovery.

For maximum impact, the establishment of a national CSIRT/CERT is strongly recommended. As infrastructures become more interconnected and interdependent, a central point of contact and coordination for different organizations and sectors within a country is vital. When creating a national CSIRT/CERT, its roles and responsibilities should be clearly defined. Enterprises, vendors, and other government entities need to understand the authority and organization of the national CSIRT/CERT. With a clear structure of regional and national capabilities, CSIRTs/CERTs can coordinate emergency response for both cyber and physical events. The national CSIRT/CERT can also disseminate threat information and best practices to improve incident response capacity.

Large-scale cyber-incidents affect both the private and public sector. Technology vendors also have an important role to play. Large firms and utilities may benefit from a specialized internal CSIRT/CERT that can respond to specific threats to the organization. All entities should cooperate in preparing incident monitoring and response, testing communication channels, and regularly revising response strategies.

Effective incident response needs government, vendors and enterprises to work together to assess, mitigate and recover from cyber-attacks. Systematic incident response enables constituents to recover quickly, with minimum damage or disruption to critical services.

There are several highly successful joint forums for CSIRTs/CERTs. CSIRTs should join existing global or regional initiatives. There are different standards for CSIRTs’ interactions. Incident handlers should ensure that they have the capability to communicate securely and confidentially with their constituency and with other CSIRTs.

Well-known challenges to incident response include:

• Detection-response systems may not be well known or widespread, or may lack capacity;

• The broader telecom world has no equivalent to FIRST (the Forum of Incident Response and Security Teams);

• Incident detection and diagnosis may not proceed to the next stages of analysis and corrective measures.

2.3.11. Responsible Disclosure[4]

Technology vendors and security researchers share the common goal of customer safety. Every technology contains flaws and vulnerabilities; true leadership lies in responsible disclosure, investigation and remediation of vulnerabilities.

Technology vendors investigate reports of security vulnerabilities in their products, analyze the risks to customers and distribute fixes where necessary, in a timely manner. They often depend on the cooperation of people who discover security vulnerabilities. Security professionals have a duty to notify vendors and give them an opportunity to address vulnerabilities in their products, before publicly disclosing the vulnerability. Given the rapid evolution in mass-deployed technologies, it is not possible to produce security patches overnight. Giving advance notice of vulnerabilities to product vendors ensures that the highest quality patch can be produced, while not exposing customers to malicious attacks. This gives vendors the chance to produce well-tested patches that address the vulnerabilities in question.

The application of security patches to both home and enterprise systems is vital. Given customer concerns, it is essential that patches are delivered in a consistent, predictable way. Complete security patches should address all additional issues found during the investigation of the vulnerability. Responsible disclosure by security researchers allows vendors to meet the needs of customers by creating the most effective patches, with minimum side-effects.

However, full disclosure of vulnerability details (e.g. on public mailing lists or websites) can raise customer anxiety. Such reports can force vendors to put out rushed solutions and security updates that customers can use to guard against the reported vulnerability. However, to release updates rapidly, shortcuts may be taken in the development process. Shortcuts can increase the risk that a fix is ineffective or does not resolve vulnerabilities in surrounding code. Vendors only take these shortcuts because they have to, knowing that once vulnerability details are published, hackers can exploit them rapidly. So, while updates may be released very rapidly (often a key argument in favor of full disclosure), there are significant costs in terms of security coverage and quality.

There are cases that fit neither full disclosure nor responsible disclosure, such as broad zero-day attacks. In those cases, only rapid cooperation between multiple vendors, researchers and the security community can mitigate and resolve the threat quickly and effectively.

Protection of computing environments or cyber-ecosystems, and the customers that depend on them, demands responsible disclosure of vulnerabilities by security researchers. Technology vendors can help accomplish this by maintaining open communication channels, treating researchers with respect, and engaging in mutual listening and learning. The cooperation of the security community to ensure that security vulnerabilities are responsibly disclosed and addressed is vital for producing high-quality, comprehensive patches.

2.3.12. Assurance and Business Models

Today’s computing environment is complex, and assurance mechanisms and certification should be developed, where appropriate. No certification scheme is perfect or applicable to all security situations. A variety of approaches, ranging from self-certification to third party evaluation, should be adopted to support the wide range of existing systems and applications available. Security assurance (the process by which computer systems, hardware and software are certified as secure) is vital for addressing vulnerabilities, as well as being important in critical infrastructure protection.

2.3.13. Common Criteria

In response to the growing sophistication of technologies and the globalization of the market for IT products, a group of nations joined forces to design a security evaluation process, known as the Common Criteria for Information Technology Security Evaluation (commonly referred to as the Common Criteria or CC). The CC are defined and maintained by an international body composed of nations that recognize CC evaluations. The CC are also recognized by the International Organization for Standardization (ISO) and International Electrotechnical Commission (IEC) as ISO/IEC International Standard 15408.

Under the CC, classes of products (such as operating systems, routers, firewalls, antivirus, etc.) are evaluated against the security functional and assurance requirements of protection profiles. Protection profiles have been developed for operating systems, firewalls, smart cards, and other products. A higher Evaluation Assurance Level (EAL) certification specifies a higher level of confidence that a product’s security functions will be performed correctly and effectively.

CC certification provides specified levels of quality assurance and allows customers to apply consistent, stringent and independently verified evaluation requirements to their IT purchases. Although CC certification does not guarantee that a product is free of security vulnerabilities, it does provide a greater level of security assurance that the product performs as documented and that the vendor supports the product, with processes to address any flaws that may be discovered.

The CC program provides customers with substantial information that can enable higher security in their implementation of evaluated products. Although CC certification is one of many different factors that can contribute to providing effective security, vendors that have embraced the CC can help customers build more secure IT systems.

CC can help customers make informed security decisions:

• Customers can compare their specific requirements against the CC’s standards to determine the level of security they require.

• Customers can decide more easily whether products meet their security requirements. As the CC require certification bodies to report on the security features of evaluated products, customers can use these reports to judge the relative security of competing IT products.

• Customers can depend on CC evaluations, because they are performed by independent testing labs.

• Since the CC is an international standard, it provides a common set of standards that multinational companies can use to choose products that meet the security needs of local operations.

The CC are based on mutual recognition. Products evaluated in approved labs are accepted by governments that are signatories to the CC agreement. However, the CC certification system has only been adopted by a limited number of countries and, in an increasingly interconnected world, CC membership may need to be expanded. The CC have been criticized as being slow and costly, and it has been suggested that they may impede government access to the latest technologies.

As Internet technologies mature, business models will place a premium on ensuring minimum levels of security for networks and devices. Similar to car or home insurance, where owners are held responsible for some events and are compensated for incidents beyond their control, organizations and businesses will be responsible for maintaining a reasonably secure environment and configurations for their connected devices. This can only happen when technologies that make security transparent and painless to all users, including those that do not have specialized technical knowledge, become state-of-the-art for all systems, devices, and applications.

2.3.14. A Lifecycle Approach to Security

Adopting a lifecycle approach can improve security for governments, firms and vendors alike. Different methodologies exist, but they all share some common elements. In February 2007, the Software Assurance Forum for Excellence in Code (www.SAFECode.org)[5], a non-profit organization established by experts to share best practices about secure development, released a paper identifying the key elements of secure software development. The seven phases are highlighted in Appendix 2 and outline a lifecycle from concept through to maintenance, including incident response and sustained engineering. Another model that can be used is the Plan-Do-Check-Act (PDCA) model adopted in ISO 9001 (QMS) and ISO 14001 (EMS).[6]

2.4. Technical and Procedural Measures of Cybersecurity

This section briefly summarizes key technical and procedural measures for cybersecurity to illustrate the scope of potential solutions. An exhaustive overview of measures can be found in the cybersecurity-related standards and frameworks listed in Section 2.6 (References).

2.4.1. Overview of Measures

Technical and procedural measures for cybersecurity are best addressed as part of a comprehensive and coordinated security initiative or program that includes, but is not restricted to:

• Infrastructure;

• Organization;

• Personnel;

• Software;

• Device and hardware security;

• Communications;

• Continuity and recovery;

• Data protection;

• Cybersecurity-related standards and frameworks;

• Standards-making activities; and

• Industry collaboration.

The following sub-sections discuss some of these measures in greater detail.

2.4.2. Measures that enable protection

Measures that enable protection should observe the following principles:

• Resilient infrastructure – network infrastructure, terminal devices, and applications should be able to function under all intentional and unintentional threats.

• Network/application integrity – networks, terminal devices and applications should perform as expected, including maintenance and testing that ascertain their integrity.

• Transport security (e.g., VPN) – secure and trusted network transport paths should be maintained.

• Encryption for data at rest – secure and trusted information should be maintained.

• Digital identity management (IdM) – IdM provides the ability to trust the assurances and assertions that entities (persons, organizations, objects or processes) make about their credentials, identifiers and attributes. This relies on common identity models and planes, common protocols for access to trusted credentials, and proper identity maintenance with known assurance levels, including interoperability between identity management federations.

• Routing and resource constraints enable access to be denied and the availability of network or application resources for communication or signaling to be controlled.

• Data retention and auditing can help ensure that data on network-based actions are available.

• Real-time data availability – accurate and granular data should be available in real-time.

• Corrective mechanisms should be available to adjust any of the above principles to correct vulnerabilities discovered through forensic analysis, based on retained or real-time data.
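
As an illustration of the data retention and auditing principle above, the following minimal Python sketch shows one possible way to make a retained log of network-based actions tamper-evident, by chaining each entry to the digest of the previous one. It is not drawn from the ITU framework: the function names (append_entry, verify_log), the record layout and the choice of SHA-256 are all assumptions made for this example.

```python
import hashlib
import json

GENESIS = "0" * 64  # assumed placeholder digest for the first entry


def _digest(previous, event):
    # Serialize the event deterministically, then hash it with the previous digest.
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((previous + payload).encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event to the log, linking it to the previous entry's digest."""
    previous = log[-1]["digest"] if log else GENESIS
    log.append({"event": event,
                "previous": previous,
                "digest": _digest(previous, event)})


def verify_log(log):
    """Return True only if every entry still matches its recorded digest chain."""
    previous = GENESIS
    for entry in log:
        if entry["previous"] != previous:
            return False
        if entry["digest"] != _digest(previous, entry["event"]):
            return False
        previous = entry["digest"]
    return True
```

With such a scheme, altering or removing an earlier record changes the digests that later records depend on, so forensic analysts can tell whether the retained data is still intact.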

2.4.3. Measures that enable threat detection

Important measures for threat detection include, but are not restricted to:

• Forensic analysis – reveals current or potential threats and provides the basis for subsequent investigation.

• Intrusion detection tools – detect actions that attempt to compromise the confidentiality, integrity or availability of a resource.
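
To make the idea of intrusion detection more concrete, the sketch below shows one very simple heuristic for spotting the scans and probes described earlier: flag any source that contacts many distinct ports on a single target. It is only an illustration under stated assumptions. The function name find_scanners, the event format (source, target, port) and the threshold of 10 ports are hypothetical, and real intrusion detection tools use far richer signals.

```python
from collections import defaultdict


def find_scanners(events, port_threshold=10):
    """Return the sorted source addresses that look like port scanners.

    events: iterable of (source_ip, target_ip, target_port) tuples,
            for example parsed from firewall or connection logs.
    A source is flagged when it has contacted at least port_threshold
    distinct ports on any single target.
    """
    ports_seen = defaultdict(set)
    for source, target, port in events:
        ports_seen[(source, target)].add(port)

    flagged = {source
               for (source, _target), ports in ports_seen.items()
               if len(ports) >= port_threshold}
    return sorted(flagged)
```

A source that repeatedly connects to the same port (such as a normal web client) is not flagged, because only distinct ports per target are counted.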

2.4.4. Measures that thwart cybercrime

Measures that can help thwart cybercrime include, but are not limited to:

• Establishing comprehensive information security programs that promote policies and the management, technical and operational controls necessary for security compliance.

• Risk evaluation and risk management – detailing the status of system security at any time is a good starting point.

• Common tools such as firewalls or protective network topology.

• Protective mechanisms in edge detection.

• Configuration management, including measures for establishing and maintaining settings or configurations for computer systems.

• Investigatory measures that can be used to create reputation sanctions.

• Blacklist/Whitelist measures – lists that can be used to deny or enable resources, in conjunction with routing and resource constraints or digital identity management.

• Legal Measures (Chapter 1) and other law enforcement action that may be pursued as a result of investigatory measures.
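
The blacklist/whitelist measure listed above can be sketched in a few lines of Python using the standard ipaddress module. This is a minimal example under assumptions: the function name is_allowed, the rule that the blacklist takes precedence, and the sample address ranges (reserved documentation ranges) are all choices made for illustration, not part of any cited standard.

```python
import ipaddress


def is_allowed(address, whitelist, blacklist):
    """Decide whether an address may access a resource.

    whitelist, blacklist: lists of networks in CIDR notation.
    Assumed policy: a blacklist match always denies; otherwise
    access is granted only on a whitelist match.
    """
    ip = ipaddress.ip_address(address)
    if any(ip in ipaddress.ip_network(net) for net in blacklist):
        return False
    return any(ip in ipaddress.ip_network(net) for net in whitelist)


# Example lists using reserved documentation ranges.
WHITELIST = ["192.0.2.0/24"]
BLACKLIST = ["192.0.2.66/32"]
```

Denying by default (anything not on the whitelist is refused) is the more conservative of the two possible policies, which is why it is used in this sketch.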

2.4.5. Measures that promote business continuity

Measures that promote business continuity include, but are not restricted to:

• Protection using classification to choose security protection measures.

• Contingency and recovery planning to develop foresight on how an organization will deal with potential disasters.

• Incident Handling / Emergency response.

• Systems and data back-up and restoration – providing backup measures for critical systems, components, devices and data to ensure recovery following an incident.

• Redundant facilities provide additional levels of assurance for critical systems, components, devices and data.
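
As a small illustration of the back-up and restoration measure above, the following Python sketch copies a file to a backup location and then checks that the copy matches the original by comparing SHA-256 digests. The function names (sha256_of, back_up_and_verify) and the single-file scope are assumptions made for this example. Production backup systems would also handle scheduling, retention and off-site storage.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def back_up_and_verify(source, backup_dir):
    """Copy source into backup_dir and confirm the copy is identical.

    Returns the path of the verified backup copy, or raises OSError
    if the digests of the original and the copy differ.
    """
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / Path(source).name
    shutil.copy2(source, target)  # copies contents and file metadata
    if sha256_of(source) != sha256_of(target):
        raise OSError(f"backup of {source} failed verification")
    return target
```

Verifying the copy at backup time means that a corrupted backup is detected immediately, rather than during recovery after an incident.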

There is no “silver bullet” for cybersecurity – no single initiative or framework can solve all problems in such a complex field. A number of frameworks and standards exist, which present exhaustive material on activities associated with building confidence and security in the use of ICTs. To avoid unnecessary duplication, HLEG’s proposals presented in Annex 1 to this book focus on ITU’s role as a facilitator in suggesting these standards and frameworks and opening them up to as many countries and companies as possible.

In terms of ITU’s role, ITU can work with existing external centers of expertise to identify, promote and foster adoption of enhanced security procedures and technical measures. It could become the global “centre of excellence” for the collection and distribution of timely telecommunications/ICT cybersecurity-related information – including a publicly-available institutional ecosystem of sources - necessary to enhance cybersecurity capabilities worldwide.

ITU could collaborate with organizations, vendors, and other appropriate subject matter experts to (1) advance incident response as a discipline worldwide, (2) promote and support possibilities for CSIRTs to join the existing global and regional conferences and forums, in order to build capacity for improving the state of the art in incident response on a regional basis, and (3) collaborate on the development of materials for establishing national CSIRTs and for effectively communicating with the CSIRT authorities.

Proposals for draft strategies in the field of technical measures are presented in Annex 1 to this book. They build upon the important work that has been done by the ITU on the development of best practices and standards for cybersecurity. With regard to standards that are developed by other standardization organizations, the ITU could act as a facilitator in promoting collaboration between different standardization organizations with a view to ensuring that new standards are developed in accordance with the principles of openness, interoperability and non-discrimination.

This Strategic Report is based on the following references:

• ITU-T ICT Security Standards Roadmap, developed by ITU-T in collaboration with ENISA and NISSG, available at: http://www.itu.int/ITU-T/studygroups/com17/ict/index.html.

• ITU-D Question 22/1, see: http://www.itu.int/ITU-D/cyb/cybersecurity/docs/itu-draft-cybersecurity-framework.pdf.

• ITU-T work in Study Group 17, see: http://www.itu.int/ITU-T/studygroups/com17/index.asp

• ITU-T Lead Study Group on Telecommunication Security at http://www.itu.int/ITU-T/studygroups/com17/tel-security.html.

• ITU-T Security Compendium including a “Catalogue of approved ITU-T Recommendations related to Telecommunication Security” available at: http://www.itu.int/ITU-T/studygroups/com17/cat005.doc

• “Extract of ITU-T approved security definitions” available at http://www.itu.int/ITU-T/studygroups/com17/tel-security.html.

• ITU-T Security Manual, “Security in Telecommunications and Information Technology” at: http://www.itu.int/publ/T-HDB-SEC.03-2006/en

• “Security Guidance for ITU-T Recommendations”, available at: http://www.itu.int/ITU-T/studygroups/com17/tel-security.html.

• IT Baseline Protection Manual, COBIT, among others.

• Standards on testing, evaluating and certification of information systems and network security.

• Software Assurance Forum for Excellence in Code (www.SAFECode.org).

• IT Association of America (ITAA).

• IT Information Sharing and Analysis Center (IT-ISAC).

2.7.1. Appendix 1: Survey of Cybersecurity Technical Forums

Global

• International Telecommunication Union

• International Organization for Standardization

• Other global organizations

Regional

• European Commission

• European Telecommunications Standards Institute

• ENISA

• Other regional organizations

Other technical forums dealing with cybersecurity include

• Internet Corporation for Assigned Names and Numbers

• International Electrotechnical Commission

• IEEE

• Internet Engineering Task Force

• W3C

• Alliance for Telecommunications Industry Solutions

• FCC

2.7.2. Appendix 2: Software Development Lifecycle

Concept: The initial phase of every software development lifecycle is to define the aim of the software, how users will interact with the product, and how it will relate to other products within the IT infrastructure. Product development managers assemble a team to develop a product.

Requirements: This phase translates the conceptual aspect of a product into measurable, observable and testable requirements. Developers tend to phrase these requirements as “the product shall…” and specify the functions to be provided, including degree of reliability, availability, maintainability and interoperability. The requirements phase should explicitly define functionality, as this affects subsequent programming, testing and management resources in the development process.

Design and Documentation: Efficient programming requires systematic specification of each requirement for a software application. This phase is more than an explicit, detailed description of product functionality - detailed design should enable near-final drafts of documentation to be produced to coincide with final release of the product.

Programming: This phase is where programmers translate the design and specification into actual code. Effective coding needs implementers to use consistent coding practices and standards throughout all aspects of production. Best practices for coding ensure that all programmers will implement similar functions in a similar manner. Programmers should be trained to ensure effective implementation of standards.

Testing, Integration and Internal Evaluation: This phase verifies and validates coding at each stage of the development process. It ensures that the concept is complete, that requirements are well-specified, and that test plans and documentation are comprehensive, consistently applied to all modules and subsystems, and integrated with the final product. Verification and validation should be carried out at each stage of development to ensure consistency of the application. Complex projects require testing and validation methodologies that anticipate potentially far-fetched circumstances, including duress testing.

Release: The application is made available for general use by customers. Before releasing the application, a software provider must ensure that the application meets product criteria. They must also identify delivery channels, train the sales team and meet orders. The application’s vendor support team must be able to respond to customer queries at the anticipated production volume.

Maintenance, Sustaining Engineering and Incident Response: These processes support released products. Applications must be updated with bug fixes, user interface enhancements or other modifications to improve the usability and performance of the product. Defects fixed in this phase should be merged into subsequent versions of code and further analysis may be necessary to mitigate the possibility of their recurrence in future versions or other products.

[2] ITU-T Recommendation X.805 (2003), Security architecture for systems providing end-to-end communications.

[3] ITU-T Recommendation X.800 (1991), “Information Processing Systems – Open Systems Interconnection – Basic Reference Model – Part 2: Security Architecture”.

[4] There are separate guidelines for users, operators, manufacturers and regulatory authorities in cyberspace.

[5] www.SAFECode.org

[6] An example of this is: http://www.itsc.org.sg/pdf/2ndmtg/Contributions.pdf.