Page 33 - AI Governance Day - From Principles to Implementation


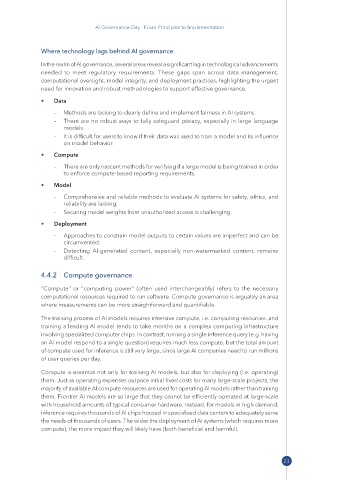

Where technology lags behind AI governance

In several areas of AI governance, the technology needed to meet regulatory requirements lags significantly behind. These gaps span data management, computational oversight, model integrity, and deployment practices, highlighting the urgent need for innovation and robust methodologies to support effective governance.

• Data

– Methods are lacking to clearly define and implement fairness in AI systems.

– There are no robust ways to fully safeguard privacy, especially in large language models.

– It is difficult for users to know whether their data was used to train a model and how it influenced the model's behavior.

• Compute

– Methods for verifying whether a large model is being trained, which are needed to enforce compute-based reporting requirements, are only nascent.

• Model

– Comprehensive and reliable methods to evaluate AI systems for safety, ethics, and reliability are lacking.

– Securing model weights from unauthorized access is challenging.

• Deployment

– Approaches to constrain model outputs to certain values are imperfect and can be circumvented.

– Detecting AI-generated content, especially non-watermarked content, remains difficult.

4.4.2 Compute governance

"Compute" or "computing power" (often used interchangeably) refers to the computational resources required to run software. Compared with other areas of AI governance, compute governance is arguably one where measurement is more straightforward and quantifiable.

Training AI models requires intensive compute, and training a leading AI model tends to take months on a complex computing infrastructure involving specialized computer chips. In contrast, running a single inference query (e.g. having an AI model respond to a single question) requires much less compute, but the total amount of compute used for inference is still very large, since large AI companies need to run millions of user queries per day.
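The contrast between one-off training compute and cumulative inference compute can be illustrated with a rough back-of-the-envelope sketch. It assumes a commonly cited rule of thumb for dense models (about 6 floating-point operations per parameter per training token, and about 2 per parameter per token at inference). Neither this rule nor any of the numbers below come from this report; the model size, token counts, and query volume are hypothetical values chosen only for illustration.

```python
# Illustrative sketch only. The 6*N*D and 2*N approximations and every
# number used here are assumptions, not figures taken from this report.


def training_flops(n_params: float, n_train_tokens: float) -> float:
    """Approximate total training compute (~6 FLOPs per parameter per token)."""
    return 6.0 * n_params * n_train_tokens


def inference_flops_per_day(
    n_params: float, queries_per_day: float, tokens_per_query: float
) -> float:
    """Approximate daily inference compute (~2 FLOPs per parameter per token)."""
    return 2.0 * n_params * queries_per_day * tokens_per_query


def days_for_inference_to_match_training(
    n_params: float,
    n_train_tokens: float,
    queries_per_day: float,
    tokens_per_query: float,
) -> float:
    """Days of serving after which cumulative inference compute equals training compute."""
    daily = inference_flops_per_day(n_params, queries_per_day, tokens_per_query)
    return training_flops(n_params, n_train_tokens) / daily


if __name__ == "__main__":
    # Hypothetical model and workload.
    n_params = 7e10          # 70 billion parameters
    n_train_tokens = 1.4e12  # 1.4 trillion training tokens
    queries_per_day = 1e7    # ten million user queries per day
    tokens_per_query = 1e3   # one thousand tokens processed per query

    train = training_flops(n_params, n_train_tokens)
    single_query = inference_flops_per_day(n_params, 1, tokens_per_query)
    days = days_for_inference_to_match_training(
        n_params, n_train_tokens, queries_per_day, tokens_per_query
    )
    print(f"Training compute:     {train:.2e} FLOPs")
    print(f"One inference query:  {single_query:.2e} FLOPs")
    print(f"Days until inference matches training: {days:.0f}")
```

Under these assumed values, a single query uses many orders of magnitude less compute than training, yet at ten million queries per day cumulative inference compute would overtake training compute in a little over a year. Different assumptions shift that crossover point considerably.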

Compute is essential not only for training AI models, but also for deploying (i.e. operating) them. Just as operating expenses outpace initial fixed costs for many large-scale projects, the majority of available AI compute resources are used for operating AI models rather than training them. Frontier AI models are so large that they cannot be operated efficiently at scale on the amount of consumer hardware found in a typical household. Instead, for models in high demand, inference requires thousands of AI chips housed in specialized data centers to adequately serve the needs of thousands of users. The wider the deployment of AI systems (which requires more compute), the more impact they will likely have (both beneficial and harmful).
