Page 84 - AI Standards for Global Impact: From Governance to Action


pyramid. Lower-level controls can negatively impact higher-level capabilities, and higher-level controls can make lower-level problems less relevant.

g) AI robotic automation can be broken down into sensing, sense making, decision making and acting, with suitable controls implemented at each stage, such as pre-established thresholds, pre-determined authorities and clear lines of accountability.

h) It is the right time to start security standardization for multi-agent systems.
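The staged breakdown in item g) can be made concrete with a small example. The Python below is a minimal sketch under assumed values, not a prescribed design: a single distance reading passes through sensing, sense making and decision making, and the three controls named in item g) (a pre-established threshold, pre-determined authorities and a record of accountability) are checked before the system acts. The threshold value, the `AUTHORITIES` table, the role names and the confidence heuristic are all hypothetical.

```python
# Hypothetical pre-established threshold: below this confidence, no autonomous action.
CONFIDENCE_THRESHOLD = 0.8

# Hypothetical pre-determined authorities: the only actions the system may take on
# its own, each mapped to the role accountable for it.
AUTHORITIES = {"continue": "operations-lead", "stop": "safety-officer"}


def sense(raw_distance_m: float) -> float:
    # Sensing: reject physically impossible readings at the lowest level.
    if raw_distance_m < 0:
        raise ValueError("negative distance reading")
    return raw_distance_m


def make_sense(distance_m: float) -> dict:
    # Sense making: hypothetical heuristic in which distant readings are less reliable.
    return {
        "obstacle_near": distance_m < 2.0,
        "confidence": 0.95 if distance_m < 10.0 else 0.5,
    }


def decide(situation: dict) -> str:
    # Decision making: a deliberately simple rule.
    return "stop" if situation["obstacle_near"] else "continue"


def act(action: str, situation: dict, audit_log: list) -> str:
    # Control 1: pre-established threshold.
    if situation["confidence"] < CONFIDENCE_THRESHOLD:
        audit_log.append((action, "human-review", "below confidence threshold"))
        return "escalated"
    # Control 2: pre-determined authority.
    owner = AUTHORITIES.get(action)
    if owner is None:
        audit_log.append((action, "human-review", "no pre-determined authority"))
        return "escalated"
    # Control 3: a clear line of accountability for every executed action.
    audit_log.append((action, owner, "executed"))
    return action


def run(raw_distance_m: float, audit_log: list) -> str:
    situation = make_sense(sense(raw_distance_m))
    return act(decide(situation), situation, audit_log)


log: list = []
print(run(1.0, log))   # prints stop
print(run(50.0, log))  # prints escalated
```

Placing each control at a separate stage means that, in this sketch, a low-confidence interpretation is escalated to a human before it can turn into an action, and every action that is executed leaves an entry naming the accountable role.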

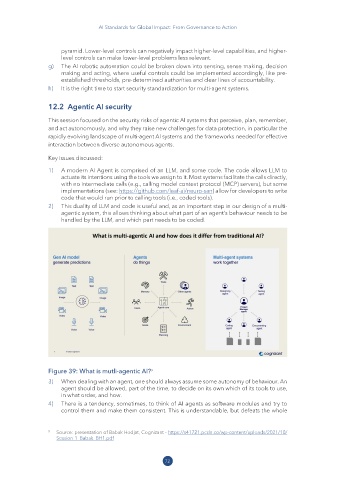

12.2 Agentic AI security

This session focused on the security risks of agentic AI systems, which perceive, plan, remember, and act autonomously, and on why these systems raise new challenges for data protection. Particular attention was given to the rapidly evolving landscape of multi-agent AI systems and the frameworks needed for effective interaction between diverse autonomous agents.
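As a rough illustration of the four capabilities named above, the following Python sketch wires perceive, plan, remember and act into a single agent step. It is a minimal sketch under stated assumptions, not a reference design: the `Agent` class, its method names and the `upper_planner` function are hypothetical, and the planner is a plain function standing in for an LLM. Two security-relevant points are visible even at this scale: the agent can only act through tools it has explicitly been assigned, and its memory accumulates everything it observes, which is where data-protection questions arise.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical planner signature: (observation, memory) -> (tool name, tool argument).
# In a real agent this decision would come from an LLM; here it is a plain function.
Planner = Callable[[str, list[str]], tuple[str, str]]


@dataclass
class Agent:
    plan: Planner
    tools: dict[str, Callable[[str], str]]  # only the tools assigned to this agent
    memory: list[str] = field(default_factory=list)

    def perceive(self, observation: str) -> str:
        # Normalise raw input before anything else sees it.
        return observation.strip()

    def remember(self, entry: str) -> None:
        # Everything stored here persists across steps, including any personal
        # data contained in observations or tool results.
        self.memory.append(entry)

    def act(self, tool_name: str, argument: str) -> str:
        # The agent may only call tools it was explicitly given.
        if tool_name not in self.tools:
            raise PermissionError(f"tool {tool_name!r} is not assigned to this agent")
        return self.tools[tool_name](argument)

    def step(self, observation: str) -> str:
        obs = self.perceive(observation)
        self.remember(f"observed: {obs}")
        tool_name, argument = self.plan(obs, self.memory)
        result = self.act(tool_name, argument)
        self.remember(f"{tool_name}({argument}) -> {result}")
        return result


def upper_planner(observation: str, memory: list[str]) -> tuple[str, str]:
    # Hypothetical stand-in for an LLM: always chooses the "upper" tool.
    return "upper", observation


agent = Agent(plan=upper_planner, tools={"upper": str.upper})
print(agent.step("  hello "))  # prints HELLO
```

Keeping the tool check in `act` rather than in the planner reflects the idea that the planner's choices are not fully predictable, so the boundary on what it can do is enforced in ordinary code.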

Key issues discussed:

1) A modern AI agent consists of an LLM and some code. The code allows the LLM to act on its intentions using the tools we assign to it. Most systems make tool calls directly (e.g., by calling Model Context Protocol (MCP) servers) with no intermediate step, but some implementations (see: https://github.com/leaf-ai/neuro-san) allow developers to write code that runs before tools are called (i.e., coded tools).

2) This duality of LLM and code is useful: as an important step in designing a multi-agentic system, it lets us decide which parts of an agent’s behaviour should be handled by the LLM and which should be coded.

Figure 39: What is multi-agentic AI?⁹

3) When dealing with an agent, one should always assume some autonomy of behaviour. An agent should be allowed, at least part of the time, to decide on its own which of its tools to use, in what order, and how.

4) There is sometimes a tendency to think of AI agents as software modules and to try to control them and make them consistent. This is understandable, but defeats the whole

9 Source: presentation by Babak Hodjat, Cognizant - https://s41721.pcdn.co/wp-content/uploads/2021/10/Session-1_Babak_BH1.pdf
