Page 65 - AI for Good-Innovate for Impact

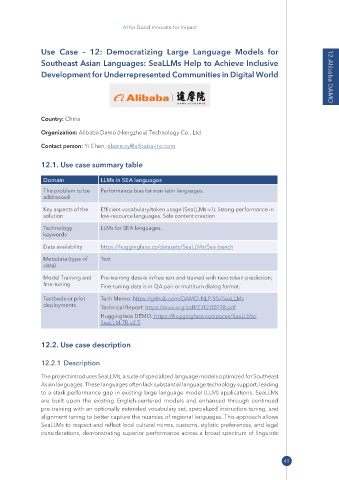

Use Case – 12: Democratizing Large Language Models for Southeast Asian Languages: SeaLLMs Help to Achieve Inclusive Development for Underrepresented Communities in Digital World

Country: China

Organization: Alibaba Damo (Hangzhou) Technology Co., Ltd.

Contact person: Yi Chen, elaine.cy@alibaba-inc.com

12.1 Use case summary table

| Item | Details |
|---|---|
| Domain | LLMs in SEA languages |
| The problem to be addressed | Performance bias for non-Latin languages. |
| Key aspects of the solution | Efficient vocabulary/token usage (SeaLLMs-v1). Strong performance in low-resource languages. Safe content creation. |
| Technology keywords | LLMs for SEA languages. |
| Data availability | https://huggingface.co/datasets/SeaLLMs/Sea-bench |
| Metadata (type of data) | Text |
| Model Training and fine-tuning | Pre-training data is in free text and trained with next token prediction; fine-tuning data is in QA pair or multi-turn dialog format. |
| Testbeds or pilot deployments | Tech Memo: https://github.com/DAMO-NLP-SG/SeaLLMs<br>Technical Report: https://arxiv.org/pdf/2312.00738.pdf<br>Huggingface DEMO: https://huggingface.co/spaces/SeaLLMs/SeaLLM-7B-v2.5 |
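The two data formats named in the "Model Training and fine-tuning" row can be illustrated with a minimal sketch. It is not the SeaLLMs pipeline: the whitespace "tokenizer", the toy vocabulary, the `<|role|>` markers, and the helper names `next_token_pairs` and `render_dialog` are all assumptions made up for illustration. It only shows the general shape of next-token-prediction targets for free text and of a multi-turn dialog flattened into one training string.

```python
from typing import Dict, List, Tuple


def next_token_pairs(token_ids: List[int]) -> List[Tuple[List[int], int]]:
    """Build (context, target) pairs: each token is predicted from its prefix."""
    return [(token_ids[:i], token_ids[i]) for i in range(1, len(token_ids))]


def render_dialog(turns: List[Dict[str, str]]) -> str:
    """Flatten a multi-turn dialog into a single training string.

    The "<|role|>" markers are a hypothetical template, not the chat
    format actually used by SeaLLMs.
    """
    return "".join(f"<|{t['role']}|>\n{t['content']}\n" for t in turns)


# Pre-training style: free text, toy whitespace tokenizer (assumption).
text = "xin chào thế giới"
words = text.split()
vocab = {w: i for i, w in enumerate(sorted(set(words)))}
ids = [vocab[w] for w in words]
pairs = next_token_pairs(ids)

# Fine-tuning style: a QA pair expressed as a two-turn dialog.
dialog = [
    {"role": "user", "content": "Thủ đô của Việt Nam là gì?"},
    {"role": "assistant", "content": "Hà Nội."},
]
rendered = render_dialog(dialog)

for context, target in pairs:
    print(context, "->", target)
print(rendered)
```

In a real setup the context/target pairs are produced implicitly by shifting the token sequence by one position inside the loss computation, and the dialog template is whatever the chosen model's tokenizer defines.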

12.2 Use case description

12.2.1 Description

The project introduces SeaLLMs, a suite of specialized language models optimized for Southeast Asian languages. These languages often lack substantial language technology support, leading to a stark performance gap in existing large language model (LLM) applications. SeaLLMs are built on existing English-centric models and enhanced through continued pre-training with an optionally extended vocabulary, specialized instruction tuning, and alignment tuning to better capture the nuances of regional languages. This approach allows SeaLLMs to respect and reflect local cultural norms, customs, stylistic preferences, and legal considerations, demonstrating superior performance across a broad spectrum of linguistic
