Page 114 - ITU Journal, ICT Discoveries, Volume 3, No. 1, June 2020 Special issue: The future of video and immersive media


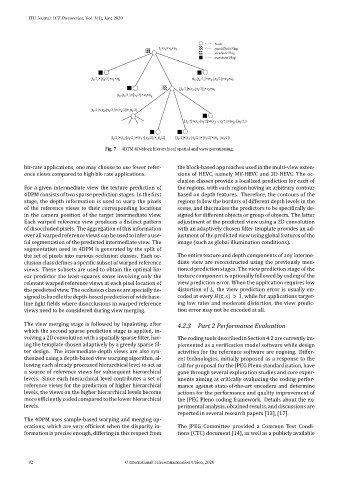

Fig. 7 – 4DTM 4D-block hierarchical spatial and view partitioning.

bit-rate applications, one may choose to use fewer reference views than for high bit-rate applications.

For a given intermediate view, the texture prediction of 4DPM consists of two sparse prediction stages. In the first stage, the depth information is used to warp the pixels of the reference views to their corresponding locations in the camera position of the target intermediate view. Each warped reference view produces a distinct pattern of disoccluded pixels. Aggregating this information over all warped reference views makes it possible to infer a useful segmentation of the predicted intermediate view. The segmentation used in 4DPM is generated by splitting the set of pixels into various occlusion classes. Each occlusion class defines a specific subset of warped reference views. At each pixel location of the predicted view, these subsets are used to obtain the optimal linear predictor, in the least-squares sense, involving only the relevant warped reference views. The occlusion classes are specially designed to handle the depth-based prediction of wide-baseline light fields, where disocclusions in warped reference views need to be considered during view merging.

The view merging stage is followed by inpainting. After inpainting, the second sparse prediction stage is applied. This stage is a 2D convolution with a spatially sparse filter whose template is chosen adaptively by a greedy sparse filter design. The intermediate depth views are also synthesized using a depth-based view warping algorithm, allowing each already processed hierarchical level to act as a source of reference views for subsequent hierarchical levels. Each hierarchical level contributes a set of reference views for the prediction of higher hierarchical levels. As a result, the views on the higher hierarchical levels are coded more efficiently than those on the lower hierarchical levels.

The 4DPM uses sample-based warping and merging operations, which are very efficient when the disparity information is sufficiently precise. In this respect, 4DPM differs from the block-based approaches used in the multi-view extensions of HEVC, namely MV-HEVC and 3D-HEVC. The occlusion classes provide a localized prediction for each region, and each region has an arbitrary contour based on depth features. The contours of the regions therefore follow the borders between different depth levels in the scene, which allows the predictors to be specifically designed for different objects or groups of objects. The second stage, a 2D convolution with an adaptively chosen filter template, adjusts the predicted view using global features of the image (such as global illumination conditions).

The entire texture and depth components of any intermediate view are reconstructed using the previously mentioned prediction stages. The view prediction stage of the texture component is optionally followed by coding of the view prediction error. When the application requires low distortion of , the view prediction error is usually encoded at every ( , ) > 1. For applications targeting low rates and moderate distortion, the view prediction error may not be encoded at all.

4.2.3 Part 2 Performance Evaluation

The coding tools described in Section 4.2 are currently implemented as verification model software, while design activities for the reference software are ongoing. Different technologies were initially proposed in response to the call for proposals for the JPEG Pleno standardization. These technologies have gone through several exploration studies and core experiments. The studies aimed to critically evaluate their coding performance against state-of-the-art encoders and to determine actions for improving the performance and quality of the JPEG Pleno coding framework. Details about the experimental analysis, the obtained results, and the discussions are reported in several research papers [13], [17].

The JPEG Committee provided a Common Test Conditions (CTC) document [14], as well as a publicly available

92 © International Telecommunication Union, 2020